2016 Hugo Prediction 1.0

The 2016 Hugo Best Novel is going to be extremely unpredictable. We know that it’s going to attract enormous attention—just think of how many posts were published about the 2015 Hugos—and that it’s going to be controversial.

The difficulty in predicting the 2016 Hugo lies in how little information we have: how big will the Rabid Puppies vote be? How will the Sad Puppies 4 operate? How much will the rest of the Hugo vote increase? Will other Hugo voters change their voting habits to stop a Puppy sweep? Will specific authors turn down endorsements and/or nominations? Earlier, I anticipated a year-to-year nominating vote increase of at least 1.8x, and that could wind up much higher depending on how broadly discussed the nominations are. The kind of predictive methods I use at Chaos Horizon (data-mining) react to such massive changes very poorly. As such, my goal is to begin developing a broad picture and then refine that as more data becomes available.

So, while I listed my prediction in order from #1-#15, I think any of the works from #1-#10 have a strong chance of grabbing an eventual nomination. Remember, I predict what I think is likely to happen, not what should happen, and that my predictions are based on past Hugo patterns and a variety of data lists I collate and track. Opinions are mine alone, and this should be used as a starting place for discussion, nothing more. Have fun with the chaos!

Anyone can vote in the Hugo awards, provided you pay the supporting membership fee ($50 this year, I believe). EDIT 1/1/16: Remember, anyone who was a member of last year’s WorldCon (Sasquan) can also vote in this year’s nomination stage. So that means everyone who was part of last year’s kerfuffle has another vote. You do have to join this year’s WorldCon to vote in the final stage, however.

Here’s the Hugo info on the voting process. MidAmeriCon has stated Hugo voting will start in early January this year.

Last year, the nominations came out on April 4, 2015. The Hugos nominate 5 works per category unless there are ties.

1. Uprooted, Naomi Novik: Novik and Stephenson are pretty interchangeable at the top. These books are just so much more popular than every other contender this year that it’s hard to picture them not grabbing nominations. Novik has a prior Hugo nomination, a front-running Nebula status, and strong placement on whatever popular votes we see out there, including the Sad Puppies themselves. Combine all of that overlapping support, and I think Novik’s fairy-tale inflected Fantasy novel has a strong chance of getting nominated (and eventually winning) this year’s Hugo.

2. Seveneves, Neal Stephenson: Stephenson is another author who does well across all sectors of the Hugo voters. Prior nominations for massive books like Anathem and Cryptonomicon show that Hugo voters aren’t turned away by Stephenson’s length or complexity. The Hugo still leans towards Science Fiction, and this was one of the biggest SF books of the year. It shows up well on a variety of lists, including Sad Puppies 4, and that broad support should drive it to a nomination. There is some dislike of this book out there (it splits into two very different parts), but dislike doesn’t really impact the nomination stage, only the final vote.

3. Rabid/Sad Puppy Overlap Nominee: Before Correia and Kloos declined their nominations in 2015, the Sad/Rabid overlaps (i.e., works that appeared on both lists) took 4 of the top 5 Hugo slots. While we won’t know what these overlaps will be until the Rabid Puppies announce their slate, we can predict that they’ll grab several top spots. Based on my early Sad Puppy census, I’m currently thinking this overlap could be something like Jim Butcher’s Aeronaut’s Windlass, John C. Wright’s Somewhither, or Michael Z. Williamson’s A Long Time Until Now. Of those three, Butcher would place highest because of his massive popularity. More popularity = more potential voters.

4. Ancillary Mercy, Ann Leckie: Leckie broke up the Puppy sweep last year with the middle volume of her well-liked trilogy; this final volume was received as a fitting end to a series that has already won a Hugo and Nebula. This series is one of the most talked-about (and nominated) SF publications of recent years.

5. Rabid/Sad Puppy Overlap Nominee: The less popular/mainstream book that the Rabid/Sad Puppies overlap on could land here. A John C. Wright or Michael Z. Williamson just has so many fewer readers (thus fewer votes) than a Butcher. Based on last year’s numbers, you would still anticipate a Top #5 placement for this overlap, although we won’t know the exact numbers/impact of the Sad Puppies and Rabid Puppies until the nominations come out.

6. The Fifth Season, N.K. Jemisin: The 2015 Hugo was very close. Spots #3-#11 were separated by only 100 votes. If we have 1000+ new voters, any of these #3-#11 spots could shuffle. I have Jemisin high because of the strong critical reception of this book, her previous Hugo and Nebula nominations, likely Nebula nomination this year, and her increased visibility in the field (she now has a regular NY Times Book Review column). The Fifth Season also fits the mold of The Goblin Emperor, as a sort of twist/revisioning of secondary world fantasy. The fantasy side of the Hugos has been driving quite a few nominations/wins lately: think about Graveyard Book, Norrell & Strange, or Among Others.

7. Rabid Puppy Nominee: This is a wildcard. Last year, when the Sad/Rabid puppies separated, they fell below 3 non-Puppy picks (Leckie, Addison, and Liu). Would the same happen this year? I’ve got no idea or even suggestion of what this book might be; we’ll have to wait and see. This would be the truest measure/test of the Rabid Puppies voting strength. Even a slight rise of the Rabid Puppy numbers could push this up 2, 3, or more slots. Depending on how often the Rabid/Sad Puppies overlap, you may have to add more Rabid Puppy nominee slots in at about this point.

8. Sad Puppy Nominee: The longer SP4 list will dilute their vote somewhat, so I expect their solo picks to place below the Rabid puppies. In the similar spot last year, they clocked in with 199 votes for Trial by Fire, although Gannon’s vote total was doubtless helped by his Nebula nomination.

9. Aurora, Kim Stanley Robinson: The next three are basically interchangeable in this prediction, all belonging to the category of SF books by past Hugo winners. Aurora is a tale of a multi-generational ship and planetary colonization, and is almost the opposite of Seveneves in terms of its approach, characterization, and philosophy. SF voters looking for an alternative to Stephenson, or even just a book to round out their ballots, might go in this direction.

10. The Dark Forest, Cixin Liu: Normally last year’s Hugo winner would be higher, but I’m not seeing the buzz for Liu you would expect. Cixin Liu himself commented on Chinese voters potentially driving this book to a nomination by saying, “That’s the best way to destroy The Three-Body Trilogy. And not just this sci-fi work, but also the reputation of Chinese sci-fi fans. The entire number of voters for the Hugo Awards is only around 5,000. That means it is easily influenced by malicious voting. Organizing 2,000 people to each spend $14 is not hard, but I am strongly against such misbehavior. If that really does happen, I will follow the example of Marko Kloos, who withdrew from the shortlist after discovering the ‘Rabid Puppies’ had asked voters to support him.”

11. The Water Knife, Paolo Bacigalupi: Bacigalupi is under the radar going into the 2016 awards season, but The Water Knife was a well-reviewed SF novel, his first since the Hugo and Nebula winning The Windup Girl, with many of the same eco-SF themes Bacigalupi is acclaimed for. Can it cut through the noise of this year’s Hugo controversies? If this shows up on a lot of the other awards, it could move up the Hugo list.

12. Nebula Nominee (Grace of Kings by Ken Liu, The Traitor Baru Cormorant by Seth Dickinson, Karen Memory by Elizabeth Bear, etc.): The Nebulas have exerted considerable influence on the Hugos over the past few years. The increased visibility of the Nebula nominees can springboard a book to a Hugo nomination; this seems to have helped both The Goblin Emperor and The Three-Body Problem last year. I’ll keep an eye on who gets Nebula noms, and then boost them in my Hugo predictions.

13. The Shepherd’s Crown, Terry Pratchett: Pratchett is going to be a sentimental favorite going into 2016. I think some people will try to nominate Discworld as a whole, which will split the Pratchett vote. Even if Pratchett is nominated, I suspect his estate would turn it down, following the precedent established by Pratchett turning down his Hugo nomination for Going Postal.

14. Nemesis Games, James S.A. Corey: I may be too high with this, but I think The Expanse TV series is going to revitalize Corey’s Hugo chances over time. The big impact may be felt next year, particularly if we have Hugo rule changes.

15. The Just City, Jo Walton: Walton’s a stealth candidate—she missed last year’s ballot by only 90 votes, and The Just City is a little more accessible and well-liked than My Real Children. Walton still has a lot of good will (and readers!) as a result of the Hugo and Nebula winning Among Others. I don’t expect a nom, but it should get some votes.

Scalzi’s not on the list because of this post saying he’s sitting out the 2015 awards. Brandon Sanderson just missed because Shadows of Self is #2 in a series; he’s an author that could greatly benefit from Hugo rule changes (huge fanbase). Darker Shade of Magic by V.E. Schwab has a huge Goodreads following, but isn’t showing up as popular in other places. Mira Grant had a run of numerous Best Novel noms earlier this decade, so she might be hanging around the Top #15. Her current series isn’t as popular as her earlier zombie series, though. Charles Stross tends to get nominated for his SF, not The Laundry Files, so that’s why he isn’t in the Top #15 for Annihilation Score. Anyone else who seems an obvious contender that I missed?

Also remember that January is very early. The Three-Body Problem and Ancillary Justice, the last two Hugo winners, just started picking up steam about now. As we see more year-end lists and the beginning of the 2016 Award nominations, the picture should snap into sharper focus. I’ll update my prediction on the first of the month in February, March, and April.

A Best Saga Hugo: An Imagined Winner’s List, 2005-2014

The proposed Best Saga Hugo has been generating a lot of debate over the past few days. Check this MetaFilter round-up for plenty of opinions. I find it hard to think of awards in the abstract, so I’ve been generating potential lists of nominees. Today, I’ll try doing winners. Check out my Part 1 and Part 2 posts for some models of what might be nominated, based on the Locus Awards and Goodreads.

Today, I’m engaging in a thought experiment. What if a Best Saga Hugo had begun in 2005? I’ll use the word count rule of the proposal, originally 400,000 and already lowered to 300,000 (although it might get lowered further in the final proposal). You have to publish a volume in your series to be eligible that year. I’m adding one more limiter, that of not winning the Best Saga Hugo twice. Under those conditions, who might win?

I’m using the assumption that Hugo voters would vote for Best Saga like they vote for Best Novel and other categories. Take Connie Willis: she has 24 Hugo nominations and 11 wins. I figure the first time she’s up for a Best Saga, she’d win. This means that my imagined winners are very much in keeping with Hugo tradition; you may find that unexciting, but I find it hard to believe that Hugo voters would abandon their favorites in a Best Saga category. I went through each year and selected a favorite. Here’s what I came up with as likely/possible winners (likely, not most deserving). I’ve got some explanation below, and it’s certainly easy to flip some of these around or even include other series. Still, this gives us a rough potential list to see if it’s worthy of a Hugo:

2005: New Crobuzon, China Mieville

2006: A Song of Ice and Fire, George R.R. Martin

2007: ? ? ?

2008: Harry Potter, J.K. Rowling

2009: Old Man’s War, John Scalzi

2010: Discworld, Terry Pratchett

2011: Time Travel, Connie Willis

2012: Zones of Thought, Vernor Vinge

2013: Culture, Iain M. Banks

2014: Wheel of Time, Robert Jordan and Brandon Sanderson

Edit: I originally had Zones of Thought for 2007, having mistaken Rainbows End for part of that series. Vinge is certainly popular enough to win, so he bumped out my projected The Laundry Files win for Stross in 2012. That opened up 2007, and I don’t know what to put in that slot. Riverside by Ellen Kushner? That might not be long enough. Malazan? But that’s never gotten any Hugo play. If you have any suggestions, let me know. It’s interesting that there are some more “open” years where no huge works in a big mainstream series came out.

Notes: I gave Mieville and Scalzi early wins in their careers based on how Hugo-hot they were at the time. Mieville had scored 3 Hugo nominations in a row for his New Crobuzon novels by 2005, and there weren’t any other really big series published in 2004 for the 2005 Hugo. Scalzi was at the height of his Hugo influence around 2009, having racked up 6 nominations and 2 wins in the 2006-2009 period. These authors were such Hugo favorites I find it hard to believe they wouldn’t be competitive in a Best Saga category.

Rowling may seem odd, but she did win a Hugo in 2001 for Harry Potter, and these were the most popular fantasy novels of the decade (by far).

I wasn’t able to find space for Bujold, although the Vorkosigan saga would almost surely win a Best Saga Hugo sometime (I gave Banks the edge in 2013 because of his untimely passing; the only other time Bujold was eligible was 2011, and I think Willis is more popular with Hugo voters than Bujold). I gave Jordan the nod in 2014 for the same reason; The Expanse would probably be the closest competition that year.

Analysis: Is this imagined list of winners a good addition to the Hugos? Or does it simply re-reward authors who are already Hugo winners? Of this imagined list, 5 of these 10 authors have won the Best Novel Hugo, although only 3 of them for novels from their Saga (Willis, Vinge, and Rowling had previously won for books from their series; Scalzi and Mieville won for non-series books). 5 are new: Banks, Jordan, Pratchett, my 2007 wild card, and Martin, although Martin had won Best Novella Hugos for his saga works. So, if Martin’s novella wins count as repeat recognition, we’re honoring 40% new work, 60% repeat work. Eh?

Overall, it seems a decent, if highly conventional, list. Those 9 are probably some of the biggest and best regarded SFF writers of the past decade. A Saga award would probably not be very diverse to start off with; some of the series projected to win have been percolating for 10-20 years. Newer/lesser known authors can’t compete. On some level, the Hugo is a popularity contest. Expecting it to work otherwise is probably asking too much of the award.

I like that Culture and Discworld might sneak in; individual novels from those series never had much of a chance at a Hugo, but I figure they’d be very competitive under a Best Saga. I’ll also add that I think Mieville and Scalzi are at their best in their series, and I’d certainly recommend new readers start with Perdido Street Station or Old Man’s War instead of The City and the City or Redshirts.

It’s interesting to imagine whether or not winning a Best Saga Hugo would have changed the Best Novel results. Would Mieville and Scalzi already having imaginary Best Saga wins have stopped The City and the City or Redshirts from winning? One powerful argument for a Best Saga Hugo is that it would open up the Best Novel category (and even Best Novella) a little more. Even someone like Willis might win in Best Saga instead of Best Novel. On the other side, people might just vote for the same books in Best Novel as in Best Saga, which would make for a pretty dull Hugos.

One interesting thing about this list, particularly if you weren’t able to win the Best Saga award twice, is that it would leave future Best Saga awards pretty wide open. You’d have The Expanse due for a win, and the Vorkosigan Saga, and the Laundry Files, maybe C.J. Cherryh’s Foreigner, probably Brandon Sanderson’s Stormlight/Cosmere, maybe Dresden Files, and then maybe Ann Leckie depending on how she extends the Imperial Radch trilogy/series, but after that—the category would really open up.

That’s what I think would happen with a Best Saga Hugo: it would be very conventional for 5-10 years as fandom clears out the consensus major series, and after that it would get more competitive, more dynamic, and more interesting. Think of it as an award investing in the Hugo future: catching up with the past at first, but then making more room in the Best Novel, Best Novella, and even Best Saga categories for other voices. Of course, that’s a best case scenario . . .

So is this a worthy set of ten imagined winners? A disappointing set? A blah set? I’ll add that, as a SFF fan, I’ve read at least one book from each of those series; I’d be interested to know how many SFF fans would be prepared to vote in a Best Saga category, or whether we’d be swamped with a new set of reading. I also feel like having read one or two books from a series gives me a good enough sense to know whether I like it or not, and thus whether I’d vote for it or not. Others might feel different.

Nebula/Hugo Convergence 2010-2014: A Chaos Horizon Report

Time for a quick study on Hugo/Nebula convergence. The Nebula nominations came out about a week ago: how much will those nominations impact the Hugos?

In recent years, quite a bit. Ever since the Nebulas shifted their rules around in 2009 (moving from rolling eligibility to calendar year eligibility; see below), the Nebula Best Novel winner usually goes on to win the Hugo Best Novel. Since 2010, this has happened 4 out of 5 times (with Ancillary Justice, Among Others, Blackout/All Clear, and The Windup Girl, although Bacigalupi did tie with Mieville). That’s a whopping 80% convergence rate. Will that continue? Do the Nebulas and Hugos always converge? How much of a problem is such a tight correspondence between the two awards?

The Hugos have always influenced the Nebulas, and vice versa. The two awards have a tendency to duplicate each other, and there’s a variety of reasons for that: the voting pools aren’t mutually exclusive (many SFWA members attend WorldCon, for instance), the two voting pools are influenced by the same set of factors (reviews, critical and popular buzz, etc.), and the two voting pools have similar tastes in SFF. Think of how much attention a shortlist brings to those novels. Once a book shows up on the Nebula or Hugo slates, plenty of readers (and voters) pick it up. In the nearly 50 years in which both the Hugo and Nebula have been given, the same novel has won both awards 23 out of 49 times, for a robust 47% convergence. As we’ll see below, this has varied greatly by decade: in some decades (the 1970s, the 2010s) the winners are basically identical. In other decades, such as the 1990s, there’s only a 20% overlap.

All of this is made more complex by which award goes first. Historically, the Hugo used to go first, often awarding books a Hugo some six months before the Nebula was awarded. Thanks to the Science Fiction Awards Database, we can find out that Paladin of Souls received its Hugo on September 4, 2004; Bujold’s novel received its Nebula on April 30, 2005. Did six months of post-Hugo hype seal the Nebula win for Bujold?

Bujold benefitted from the strange and now defunct Nebula rule of rolling eligibility. The Locus Index to SF Awards gave us some insight on how the Nebula used to be out of sync with the Hugo:

The Nebulas’ 12-month eligibility period has the effect of delaying recognition of many works until nearly 2 years after publication, and throws Nebula results out of synch with other awards (Hugo, Locus) voted in a given calendar year. (NOTE – this issue will pass with new voting rules announced in early 2009; see above.)

The rule change went through in early 2009:

SFWA has announced significant rules changes for the Nebula Awards process, eliminating rolling eligibility and limiting nominations to work published during a given calendar year (i.e., only works published in 2009 will be eligible for the 2010 awards), as well as eliminating jury additions. The changes are effective as of January 2009 and “except as explicitly stated, will have no impact on works published in 2008 or the Nebula Awards process currently underway.”

Since 2009, eligibility has been straightened out: Hugo and Nebula eligibility basically follow the same rules, and now it is the Nebula that goes first. The Nebula tends to announce a slate in late February, and then gives the award in early May. The Hugo announces a slate in mid-April, and then awards in late August/early September, although those dates change every year.

Tl;dr: while it used to be the Hugos that influenced the Nebulas, since 2010 it is the Nebulas that influence the Hugos. We know that Nebula slates tend to come out while Hugo slate voting is still going on. This means that Hugo voters have a chance to wait until the Nebulas announce their nominations, and then adjust/supplement their voting as they wish. This year, there were about 3 weeks between the Nebula announcement and the close of Hugo voting: were WorldCon voters scrambling to read Annihilation and The Three-Body Problem in that gap? Remember, even a slight influence on WorldCon voters can drastically change the final slate.

But how much? Let’s take a look at the data from 2010-2014, or the post-rule change era. That’s not a huge data set, but the results are telling.

Chart 1: Hugo/Nebula Convergence in the Best Novel Categories, 2010-2014

This chart shows how many of the Nebula nominations showed up on the Hugo ballot a few weeks later. You can see that it comes to around 40% on average. Don’t get fooled by the 2014 data: Neil Gaiman’s The Ocean at the End of the Lane made both the Nebula and Hugo slate, but Gaiman declined his Hugo nomination. If we factored him in, we’d be staring at that same 40% across the board.

40% isn’t that jarring, since that only means 2 out of the 5 Hugo nominees. If we consider the overlap between reading audiences, critical and popular acclaim, etc., that doesn’t seem too far out of line.

It’s the last column that catches my eye: 4/5 joint winners, or 80% joint winners in the last 5 years. Only John Scalzi managed to eke out a win over Kim Stanley Robinson; otherwise we’d be batting 100%. We should also keep in mind the tie between The City and the City and The Windup Girl in 2010.

Nonetheless, my research shows that the single biggest indicator of winning a Hugo from 2010-2014 is whether or not you won the Nebula that year. Is this a timeline issue: does the Nebula winner get such a signal boost on the internet in May that everyone reads it in time for the Hugo in August? Or are the Hugo/Nebula voting pools converging to the point that their tastes are almost the same? Were the four joint-winners in the 2010s so clearly the best novels of the year that all of this is moot? Or is this simply a statistical anomaly?

I’m keeping a close eye on this trend. If Annihilation sweeps the Nebula and Hugos this year, the SFF world might need to take a step back and ask if we want the two “biggest” awards in the field to move in lockstep. This has happened in the past. Let’s take a look at the trends of Hugo/Nebula convergence by decade in the field:

That’s an odd chart for you: the 1960s (only 4 years, though) had 25% joint winners, the 1970s jumped to 80%, we declined through the 1980s (50%) and the 1990s (20%), stayed basically flat in the 2000s (30%), and then jumped back up to 80% in the 2010s. Why so much agreement in the 1970s and 2010s with so much disagreement in the 1990s and 2000s? The single biggest thing that changed from the 2000s to the 2010s was the Nebula rules: is that the sole cause of present day convergence?
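The decade percentages can be cross-checked against the overall 23-out-of-49 figure quoted earlier. Here is a minimal sketch in Python. The per-decade counts are my back-calculation from the percentages, assuming 10 award years per full decade, 4 in the 1960s, and 5 in the 2010s (2010-2014); they are not pulled from a separate data source.

```python
# Joint Hugo/Nebula Best Novel winners by decade, as (joint winners, award years).
# ASSUMPTION: counts are back-calculated from the quoted percentages, with
# 10 award years per full decade, 4 in the 1960s, and 5 in the 2010s.
decades = {
    "1960s": (1, 4),
    "1970s": (8, 10),
    "1980s": (5, 10),
    "1990s": (2, 10),
    "2000s": (3, 10),
    "2010s": (4, 5),
}


def convergence_rate(joint, years):
    """Share of award years in which one novel won both awards."""
    return joint / years


for decade, (joint, years) in decades.items():
    print(f"{decade}: {joint}/{years} = {convergence_rate(joint, years):.0%}")

# Summing the decades should reproduce the overall figure.
total_joint = sum(joint for joint, _ in decades.values())
total_years = sum(years for _, years in decades.values())
overall = convergence_rate(total_joint, total_years)
print(f"Overall: {total_joint}/{total_years} = {overall:.0%}")
```

Under those assumptions the decade counts sum to 23 joint winners over 49 award years, which matches the overall rate of roughly 47%.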

I don’t have a lot of conclusions to draw for you today. I think convergence is a very interesting (and complex) phenomenon, and I’m not sure how I feel about it. Should the Hugos and Nebulas go to different books? Should they only converge for books of unusual and universal acclaim? In terms of my own predictions, I expect the trend of convergence to continue: I think 2-3 of this year’s Nebula nominees will be on the Hugo ballot. If I had to guess, I’d bet that this year’s Nebula winner will also take the Hugo. Given this data, you’d be foolish to do anything else.

2014 Locus Recommended Reading List

There’s a wealth of information there, including recommendations for categories that I don’t have the time to follow, like YA Novel, Novella, Novelette, and Short Story. In the past, most of the future Hugo and Nebula nominees have shown up on these lists. Part of that is because the lists are so long (20-30 suggestions each), but also because Locus pretty closely mirrors the sentiments of the SFWA and the Nebula.

Here are their SF and Fantasy lists:

Novels – Science Fiction

•Ultima, Stephen Baxter (Gollancz; Roc 2015)

•War Dogs, Greg Bear (Orbit US; Gollancz)

•Shipstar, Gregory Benford & Larry Niven (Tor; Titan 2015)

•Chimpanzee, Darin Bradley (Underland)

•Cibola Burn, James S.A. Corey (Orbit US; Orbit UK)

•The Book of Strange New Things, Michel Faber (Hogarth; Canongate)

•The Peripheral, William Gibson (Putnam; Viking UK)

•Afterparty, Daryl Gregory (Tor; Titan)

•Work Done for Hire, Joe Haldeman (Ace)

•Tigerman, Nick Harkaway (Knopf; Heinemann 2015)

•Europe in Autumn, Dave Hutchinson (Solaris US; Solaris UK)

•Wolves, Simon Ings (Gollancz)

•Ancillary Sword, Ann Leckie (Orbit US; Orbit UK)

•Artemis Awakening, Jane Lindskold (Tor)

•The Three-Body Problem, Cixin Liu (Tor)

•The Causal Angel, Hannu Rajaniemi (Tor; Gollancz)

•The Memory of Sky, Robert Reed (Prime)

•Bête, Adam Roberts (Gollancz)

•Lock In, John Scalzi (Tor; Gollancz)

•The Blood of Angels, Johanna Sinisalo (Peter Owens)

•The Bone Clocks, David Mitchell (Random House; Sceptre)

•Lagoon, Nnedi Okorafor (Hodder; Saga 2015)

•All Those Vanished Engines, Paul Park (Tor)

•Annihilation/Authority/Acceptance, Jeff VanderMeer (FSG Originals; Fourth Estate; HarperCollins Canada)

•Dark Lightning, John Varley (Ace)

•My Real Children, Jo Walton (Tor; Corsair)

•Echopraxia, Peter Watts (Tor; Head of Zeus 2015)

•World of Trouble, Ben H. Winters (Quirk)

Novels – Fantasy

•The Widow’s House, Daniel Abraham (Orbit US; Orbit UK)

•The Goblin Emperor, Katherine Addison (Tor)

•Steles of the Sky, Elizabeth Bear (Tor)

•City of Stairs, Robert Jackson Bennett (Broadway; Jo Fletcher)

•Hawk, Steven Brust (Tor)

•The Boy Who Drew Monsters, Keith Donohue (Picador USA)

•Bathing the Lion, Jonathan Carroll (St. Martin’s)

•Full Fathom Five, Max Gladstone (Tor)

•The Winter Boy, Sally Wiener Grotta (Pixel Hall)

•The Magician’s Land, Lev Grossman (Viking; Arrow 2015)

•Truth and Fear, Peter Higgins (Orbit; Gollancz)

•The Mirror Empire, Kameron Hurley (Angry Robot US)

•Resurrections, Roz Kaveney (Plus One)

•Revival, Stephen King (Scribner; Hodder & Stoughton)

•The Dark Defiles, Richard K. Morgan (Del Rey; Gollancz)

•The Bees, Laline Paull (Ecco; Fourth Estate 2015)

•The Godless, Ben Peek (Thomas Dunne; Tor UK)

•Heirs of Grace, Tim Pratt (47North)

•Beautiful Blood, Lucius Shepard (Subterranean)

•A Man Lies Dreaming, Lavie Tidhar (Hodder & Stoughton)

•The Girls at the Kingfisher Club, Genevieve Valentine (Atria)

•California Bones, Greg van Eekhout (Tor)

Like I said, pretty comprehensive. Most of the major candidates are there, ranging from VanderMeer to Leckie to Addison to Bennett. Here are the snubs I noticed:

The Martian, Andy Weir: That’s a good indication that the “industry” doesn’t consider this a 2014 book.

Station Eleven, Emily St. John Mandel: A surprise. Maybe it caught fire too late in the year to make the list?

The First Fifteen Lives of Harry August, Claire North

Most mainstream fantasy novels: no Words of Radiance, no The Broken Eye, no Fool’s Assassin, no Prince of Fools, no The Emperor’s Blades, no The Slow Regard of Silent Things. It says something when you put together a list of 22 fantasy novels and leave out most of the fantasy best-sellers. Is Locus arguing that excellence can’t be achieved in mainstream epic fantasy? Or are they reflecting their audience’s lack of interest in epic series? Sure, there are a few fantasy series on the list (Richard K. Morgan, Elizabeth Bear, Lev Grossman, Kameron Hurley), but each of those is set up, on some level, as a challenge to more conventional epic fantasy.

There are several books that haven’t gotten an official US publication yet (or at least they aren’t available on Amazon): Lagoon, A Man Lies Dreaming, Bête, and Wolves. You’d think publication would be truly international in 2014, but that’s not yet the case. Lagoon, in particular, would have had a Nebula and Hugo shot if it had gotten a US publication. Without one, it’s probably not eligible for the Nebula, and thus can’t build momentum towards a Hugo.

Lastly, is The Bone Clocks really science fiction? I guess part of the novel takes place in the future, so that’s probably why they placed it in that category. It felt more like a horror/weird fiction/fantasy hybrid to me, but I guess classification doesn’t matter that much in the end.

I’ve been waiting for this list; now that we have it, I’ll update and finalize the Critics Meta-List.

Hugo Contenders and Popularity, January 2015

It’s the end of the month, so time to check in on the popularity of the leading Hugo and Nebula contenders!

To do this, I use Goodreads numbers. We don’t have a lot of reliable ways to measure sales in the SFF field. Publishers tend to keep their numbers secret, so we’re left having to estimate via the number of Goodreads or Amazon ratings. Since Amazon bought Goodreads in 2013, the Amazon and Goodreads data have been converging.

I prefer Goodreads because it has a larger sample size. Goodreads is not even close to 100% accurate. My current guess is that it samples 5%-20% of the American readership, and that this varies substantially from author to author. So one author might be sampled at a 20% rate, but another at only 5%. Since Goodreads is now integrated with Amazon Kindle, it very much favors books that sell large numbers through Amazon. Authors that sell large numbers of copies through physical outlets, such as Stephen King, do much worse in these numbers. Goodreads also slants decidedly young in its demographic, with all the various statistical issues that brings. So take these with a grain of salt; they’re better for comparison purposes than for absolutes. Look for differences in order of magnitude, not fine-grain differences such as whether one book has 500 more readers than another.
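To make the 5%-20% sampling guess concrete, here is a rough sketch in Python of how a rating count turns into a readership range, and why only order-of-magnitude gaps are worth reading into. The function names and the rating counts are hypothetical, made up for illustration; the sampling rates are my guess from above, not a measured figure.

```python
import math


def estimated_readers(goodreads_ratings, low_rate=0.05, high_rate=0.20):
    """Return a (low, high) readership estimate from a Goodreads rating count.

    ASSUMPTION: Goodreads captures somewhere between low_rate and high_rate
    of a book's readers. A higher sampling rate implies fewer total readers.
    """
    if goodreads_ratings < 0:
        raise ValueError("rating count cannot be negative")
    low = goodreads_ratings / high_rate
    high = goodreads_ratings / low_rate
    return round(low), round(high)


def same_order_of_magnitude(ratings_a, ratings_b):
    """True when two positive rating counts differ by less than a factor of 10."""
    return abs(math.log10(ratings_a / ratings_b)) < 1


# Hypothetical rating counts, not taken from the spreadsheet.
print(estimated_readers(10_000))
print(same_order_of_magnitude(10_000, 10_500))
print(same_order_of_magnitude(1_000, 50_000))
```

A book with 10,000 ratings could have anywhere from 50,000 to 200,000 readers under this guess, which is why a 500-rating gap between two books tells us very little.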

What I’m looking for is a general sense of which novels are “hot” and which are selling more slowly. Since this is the first year of Chaos Horizon, we don’t know how predictive sales are for the awards; I don’t think there’s a simple correlation like “more sales = greater chances of winning.” However, there is probably some sort of “sales floor” we can discover. Without moving some copies, you’re not popular enough to get nominated or win. I also think picking up lots of readers in January has got to help your Hugo/Nebula chances; the fresher a book is in the mind, the better chance of voting for it.

Here’s the full Excel data; it’s getting too bulky to present here on WordPress: Hugo Metrics. I’ve got data going back to October. Methodology: I record the # of Goodreads ratings on the last day of the month.

Table 1: Goodreads Popularity for Selected Hugo and Nebula Contenders

I’m still blown away by how well The Martian is doing. According to Goodreads, more people read The Martian last month than the bottom 15 books on my list combined. That’s sales power! If The Martian proves to be eligible for the 2015 Hugo, it’ll be a formidable competitor. It would be very interesting to see how such a “mainstream” SF hit does against a more literary SFF novel like Annihilation.

Mandel’s Station Eleven is also on fire, putting up a huge 10,000 reader month. I think that number solidifies Mandel’s Nebula chances, and if she grabs a Nebula nomination, she could make a run at a Hugo nomination. I added Skin Game to the list for this month, to get a look at how a popular mainstream urban fantasy novel does against its science fiction and fantasy brethren. Very well, it turns out.

Everyone else is sort of floating along. A lot of books have finished up their hardcover runs, and are waiting for their paperbacks to come along and revitalize sales. If I had a book out, I’d want my paperback to come out in January: look at those huge reader numbers for this month. People must be spending their Holiday gift cards! Frontline possibilities like Annihilation are selling well enough to still be in the mix. I’d point to books like City of Stairs, The Goblin Emperor, and The Mirror Empire, which are all languishing at the bottom of my list. They’ve been doing well on Year-End lists, but they don’t have the sales to match that critical enthusiasm. Here’s a chart showing momentum over the past 3 months:

Table 2: Goodreads Momentum for Selected Hugo and Nebula Contenders

I’m surprised that January did better than December. People must have been too busy with Christmas to read! It’s interesting to see something like The Martian build momentum over the last 3 months; word of mouth really is paying off for a book like that. In contrast, something like Ancillary Sword is basically flat over those same three months. I’m interested to see if such momentum is predictive or not.
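For anyone who wants to play along at home, here is a minimal sketch of how "momentum" can be pulled out of end-of-month snapshots. It assumes each snapshot is a cumulative ratings total, so a month's new readers is just the difference between consecutive snapshots. The book names and numbers below are made up for illustration; they are not from my spreadsheet.

```python
def monthly_gains(snapshots):
    """Return new ratings per month from cumulative end-of-month totals."""
    return [later - earlier for earlier, later in zip(snapshots, snapshots[1:])]


# Hypothetical cumulative rating counts for Oct, Nov, Dec, Jan (not real data).
example_totals = {
    "Book A": [10_000, 14_000, 19_000, 27_000],
    "Book B": [5_000, 5_600, 6_200, 6_800],
}

for title, totals in example_totals.items():
    gains = monthly_gains(totals)
    # Crude momentum check: is the latest month bigger than the first one?
    trend = "building" if gains[-1] > gains[0] else "flat or slowing"
    print(title, gains, trend)
```

Given how noisy Goodreads is, a comparison this crude is probably about as much precision as the data deserves.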

Check out previous posts in this series to see the evolution of this data: December, November, October.

How Many Nominating Ballots for the 2015 Hugo?

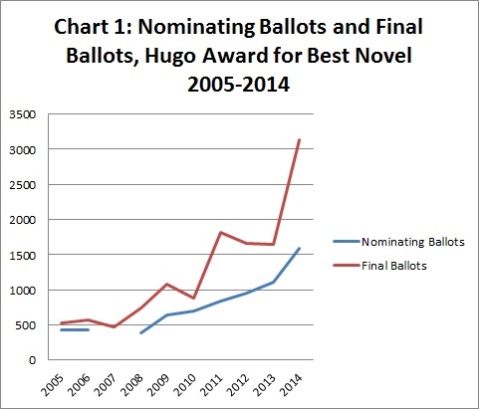

The turnout for the 2014 Hugo Award was unprecedented, with a huge jump in both nominating and final ballots. There are several reasons for that jump: London was a bigger than normal WorldCon city, and that certainly brought in more fans. Spokane in 2015 isn’t going to come close. The 2014 Hugo debate was also unusually vehement, with Larry Correia’s Sad Puppy campaign and the Wheel of Time’s “whole series deserves a nomination” campaign drawing in an unusual number of supporters and protesters. All of that led to a near-doubling of the final Hugo Best Novel vote, from 1649 final Best Novel ballots in 2013 to 3137 Best Novel ballots in 2014.

That leaves a huge question open: how is that record turnout going to impact 2015? Remember, everyone who voted in 2014 is eligible to nominate in 2015. That’s a real oddity of the award, and it’s part of the reason the Hugo has a very repetitive flavor. Just consider: if you voted one book as best novel in 2014, aren’t you more likely to vote for that same author again in 2015? Here’s the relevant WorldCon rule:

3.7.1: The Worldcon Committee shall conduct a poll to select the nominees for the final Award voting. Each member of the administering Worldcon, the immediately preceding Worldcon, or the immediately following Worldcon as of January 31 of the current calendar year shall be allowed to make up to five (5) equally weighted nominations in every category.

While not everyone from LonCon3 will participate, the potential pool of nominators for 2015 is huge. Let’s do what Chaos Horizon does and look at some stats. First up, I data mined the Hugo Award website to come up with the number of nominating and final ballots for the Hugo Award over the past 10 years:

Table 1: Number of Nominating and Final Ballots for the Best Novel Hugo, 2005-2015

Frustratingly, I couldn’t find any data for the number of nominating ballots in 2007. The .pdf of that info only gives the number of nominations per book, not the total number of Best Novel ballots. If anyone has that data point, I’d love to patch up the chart.

Carryover is my awkward term for the ratio of Next Year’s Nominating Ballots to Previous Year’s Final Ballots. Since last year’s Final Balloteers can nominate for the next award, this ratio is what we need to predict the number of nominators for 2015. Of course, not every voter from the past year will nominate, and you can see a definite perturbation in the line based on location as well. Nonetheless, if we average those out, the number of nominators works out to be around 78% of the previous year’s final vote. Let’s just call it 75% for a ballpark. Imprecise, I know, but this will give us a start.
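Here is a rough sketch of the carryover calculation, in case the definition reads more clearly as code. The ballot pairs below are placeholders I invented so the example runs; swap in the real table values to reproduce the 78% figure.

```python
def carryover(next_year_nominating, prev_year_final):
    """Ratio of next year's nominating ballots to the previous year's final ballots."""
    return next_year_nominating / prev_year_final


# Hypothetical (final ballots in year N, nominating ballots in year N+1) pairs.
# These are NOT the real Hugo numbers; they only illustrate the averaging step.
ballot_pairs = [(1000, 800), (1200, 900), (1500, 1200)]

ratios = [carryover(nominating, final) for final, nominating in ballot_pairs]
average_carryover = sum(ratios) / len(ratios)

print([round(r, 2) for r in ratios])
print(round(average_carryover, 2))
```

Note that this is a simple average of the yearly ratios, so a single odd year (a big WorldCon city, say) pulls it around quite a bit.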

And visually:

That chart really shows how unusual 2014 was, but the general trend has definitely been towards more Hugo voters and more Hugo nominators.

What does that mean for this year’s award? Well, let’s tackle it from a couple of angles:

1. If we accept that 75% number, that would mean roughly 2350 nominating ballots for 2015. That seems huge, but even if you went with only 50% carryover, we’d have roughly 1570, which is almost the biggest ever. If we land on a middle-ground number like 2000, that means each novel will need around 200 votes (10%) to make it into the final Hugo slate.

2. Each of the 2014 Hugo nominees (Leckie, Correia, Stross, Grant, Sanderson) has a sizeable built-in Hugo advantage for 2015. Let’s zoom in on Correia as an example: last year, 332 people voted Warbound as the Best Novel of 2014. If 75% of those nominate Correia again (as they’re fully eligible to do), that would likely get him into this year’s field. Sanderson’s case is even more interesting: 658 voters placed Wheel of Time in the #1 spot on their final 2014 ballots. Now, Sanderson isn’t Robert Jordan, but what percentage of those are going to support Words of Radiance? If we use that 200 bar I estimated above, that means Sanderson needs to keep only 30% of the WOT vote to make the slate. Doable? I don’t know.

3. A bigger pool of nominators might make it harder for lesser known authors to get into the 2015 field. In 2013, it only took Saladin Ahmed 118 votes to sneak into the final slate. In 2014, it took Mira Grant 98. That number could double for 2015. You’ll need either broad or passionate support to make the 2015 slate, something more niche novels might not be able to muster. It’s easy to imagine a 2015 scenario where Leckie keeps her vote, Correia keeps his vote, and Sanderson grabs a sizeable percentage of The Wheel of Time vote. That’s 3 spots already. Stross could keep his vote, or it could slide over to Scalzi (all S authors are interchangeable, right?). Grant is the borderline case. She received the fewest nominations and the fewest final-ballot votes of the bunch last year, and is thus the most likely to drop off. In that scenario, the entire rest of the SFF world is fighting for one open Hugo spot.
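The back-of-the-envelope math in the three points above can be sketched like this. All the inputs come from this post (3137 final ballots, the 75% and 50% carryover rates, a 2000-ballot middle ground, the 10% bar, 332 Warbound voters, 658 Wheel of Time voters); the variable and function names are my own shorthand, and the 10% bar is a ballpark guess rather than a rule.

```python
FINAL_BALLOTS_2014 = 3137  # Best Novel final ballots in 2014


def projected_nominators(final_ballots, carryover_rate):
    """Estimate next year's nominating ballots from last year's final ballots."""
    return final_ballots * carryover_rate


high_estimate = projected_nominators(FINAL_BALLOTS_2014, 0.75)
low_estimate = projected_nominators(FINAL_BALLOTS_2014, 0.50)

# Middle-ground pool and the rough 10% share needed to make the slate.
middle_pool = 2000
votes_needed = middle_pool * 0.10

# Correia: how many of his 332 first-place voters return at 75% carryover?
correia_returning = 332 * 0.75

# Sanderson: what share of the 658 Wheel of Time voters does he need to keep?
sanderson_share_needed = votes_needed / 658

print(f"High estimate: {high_estimate:.0f} nominating ballots")
print(f"Low estimate: {low_estimate:.0f} nominating ballots")
print(f"Votes needed at a {middle_pool} pool: {votes_needed:.0f}")
print(f"Correia returning voters: {correia_returning:.0f}")
print(f"Sanderson share needed: {sanderson_share_needed:.0%}")
```

Change the carryover rate or the pool size and the bar moves with it, which is really the point: every one of these inputs is a guess.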

One thing that makes the Hugo unusual, and interesting, is the oddity of its nominating and balloting practices. How is 2015 going to play out? Are we looking at a record turnout? And does a record turnout mean record repetition? Stay tuned . . . for more Chaos! (Insert laughter and more terrible jokes).

Literary Fiction and the Hugo and Nebula Awards for Best Novel, 2001-2014

A sub-category of my broader genre study, this post addresses the increasing influence of “literary fiction” on the contemporary Hugo and Nebula Awards for Best Novel, 2001-2014. I think the general perception is that the awards, particularly the Nebula, have begun nominating novels that include minimal speculative elements. Rather than simply trust the general perception, let’s look to see if this assumption lines up with the data.

Methodology: I looked at the Hugo and Nebula nominees from 2001-2014 and ranked the books as either primarily “speculative” or “literary.” Simple enough, right?

Defining “literary” is a substantial and significant problem. While most readers would likely acknowledge that Cloud Atlas is a fundamentally different book than Rendezvous with Rama, articulating that difference in a consistent manner is complicated. The Hugos and Nebulas offer no help themselves. Their by-laws are written in an incredibly vague fashion that does not define what “Science Fiction or Fantasy” actually means. Here’s the Hugo’s definition:

Unless otherwise specified, Hugo Awards are given for work in the field of science fiction or fantasy appearing for the first time during the previous calendar year.

Without a clear definition of “science fiction or fantasy,” it’s left up to WorldCon or SFWA voters to set genre parameters, and they are free to do so in any way they wish.

All well and interesting, but that doesn’t help me categorize texts. I see three types of literary fiction entering into the awards:

1. Books by literary fiction authors (defined as having achieved fame before their Hugo/Nebula nominated book in the literary fiction space) that use speculative elements. Examples: Cloud Atlas, The Yiddish Policemen’s Union.

2. Books by authors in SFF-adjacent fields (primarily horror and weird fiction) that have moved into the Hugo/Nebulas. These books often allow readers to see the “horror” elements as either being real or imagined. Examples: The Drowning Girl, Perfect Circle, The Girl in the Glass.

3. Books by already well-known SFF authors who are utilizing the techniques/styles more commonplace to literary fiction. Examples: We Are All Completely Beside Ourselves, Among Others.

That’s a broad set of different texts. To cover all those texts—remember, at any point you may push back against my methodology—I came up with a broad definition:

I will classify a book as “literary” if a reader could pick the book up, read a random 50 page section, and not notice any clear “speculative” (i.e. non-realistic) elements.

That’s not perfect, but there’s no authority we can appeal to for these classifications. Let’s see how it works:

Try applying this to Cloud Atlas. Mitchell’s novel consists of a series of entirely realistic novellas set throughout various ages of history and one speculative novella set in the future. If you just picked the book up and started reading, chances are you’d land in one of the realistic sections, and you wouldn’t know it could be considered a SFF book.

Consider We Are All Completely Beside Ourselves, Karen Joy Fowler’s rich meditation on science, childhood, and memory. Told in realistic fashion, it follows the story of a young woman whose parents raised a chimpanzee alongside her, and how this early childhood relationship shapes her college years. This isn’t the place to decide if Fowler deserved a Nebula nomination (she won the PEN/Faulkner Award and was shortlisted for the Booker for this same book, so quality isn’t much of a question), but the styles, techniques, and focus of Fowler’s book are intensely realistic. Unless you’re told it could be considered a SF novel, you’d likely consider it plain old realistic fiction.

With this admittedly imperfect definition in place, I went through the nominees. For the Nebula, I counted 13 out of 87 nominees (15%) that met my definition of “literary.” While a different statistician would classify books differently, I imagine most of us would be in the same ballpark. I struggled with The City & The City, which takes place in a fictional dual-city and utilizes a noir plot; I eventually saw it as being more Pynchonesque than speculative, so I counted it as “literary.” I placed The Yiddish Policemen’s Union as literary fiction because of Chabon’s earlier fame as a literary author. After he establishes the “Jews in Alaska” premise, large portions of the book are straightforwardly realistic. Other books could be read either as speculative or not, such as The Drowning Girl. Borderline cases all went into the “literary” category for this study.

Given that I like the Chabon and Mieville novels a great deal, I’ll emphasize I don’t think being “literary” is a problem. Since these kinds of books are not forbidden by the Hugo/Nebula by-laws, they are fair game to nominate. These books certainly change the nature of the award, and there are real inconsistencies—no Haruki Murakami nominations, no The Road nomination—in which literary SFF books get nominated.

As for the Hugos, only 4 out of 72 nominees met my “literary” definition. Since the list is small, let me name them here: The Years of Rice and Salt (Robinson’s realistically told alternative history), The Yiddish Policemen’s Union, The City & The City, and Among Others. Each of those pushes the genre definitions of speculative fiction. Two are flat out alternative histories, which have traditionally been considered a SFF category, although I think the techniques used by Robinson and Chabon are very reminiscent of literary fiction. The Mieville is an experimental book, and the Walton is a book as much “about SFF” as SFF. I’d note that 3 of those 4 (all but the Robinson) received Nebula nominations first, and that Nebula noms have a huge influence on the Hugo noms.
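If you want to check my percentages, or rerun them with your own classifications, the arithmetic is a quick sketch like the one below. The counts are the ones from this post (13 of 87 Nebula nominees, 4 of 72 Hugo nominees); the dictionary layout is just an assumption for illustration.

```python
# (literary nominees, total nominees) per award, 2001-2014, from this study.
literary_counts = {
    "Nebula": (13, 87),
    "Hugo": (4, 72),
}

for award, (literary, total) in literary_counts.items():
    share = literary / total
    one_in = total / literary
    print(f"{award}: {share:.1%} literary, about 1 in {one_in:.1f} nominees")
```

Moving even one or two borderline books from one column to the other shifts these shares noticeably, which is why I treat them as ballpark figures.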

Let’s look at this visually:

Even with my relatively generous definition of “literary,” that’s not a huge encroachment. Roughly 1 in 6 of the Nebula noms have been from the literary borderlands, which is lower than what I’d expected. While 2014 had 3 such novels (the Fowler, Hild, and The Golem and the Jinni), the rest of the 2010s had about 1 borderline novel a year.

The Hugos have been much less receptive to these borderline texts, usually only nominating them once the Nebula awards have done so. We should note that both Chabon and Walton won, once again reflecting the results of the Nebula.

So what can we make of this? The Nebula nominates “literary” books about 1 time in 6, or roughly once per year. The Hugo does this much more infrequently, and usually when a book catches fire in the Nebula process. While this represents a change in the awards, particularly the Nebula, it is nowhere near as rapid or significant as the changes regarding fantasy (which are around 50% Nebula and 30% Hugo). I know some readers think “literary” stories are creeping into the short story categories; I’m not an expert on those categories, so I can’t meaningfully comment.

I’m going to use the 15% Nebula and 5% Hugo “literary” number to help shape my predictions. I may have been overestimating the receptiveness of the Nebula to literary fiction; this study suggests we’d see either Mitchell or Mandel in 2015, not both. Here’s the full list of categorizations. I placed a 1 by a text if it met the “literary” definition: Lit Fic Study.

Questions? Comments?

Best of 2014: Strange Horizons

Now that I’ve put up the Mainstream Best of 2014 Meta-List, I can move on to the far more interesting (and predictive) SFF Critics Meta-List. I’m starting today with Strange Horizons, because their “Best of 2014” list causes an immediate methodological crisis. Thanks, Niall!

Like many of these posts from bigger publications, Strange Horizons is a meta-list unto itself, including short paragraphs highlighting the “Best of 2014” from 18 different critics. These critics represent a large range of important voices in the field, including Hugo nominated authors and fan writers. Of course, Strange Horizons was itself a Hugo nominee for semiprozine (whatever that means) in 2013 and 2014, and is thus likely to carry a fair amount of weight with Hugo voters.

All good so far, and this is exactly what I’m looking for in a predictive list. I figure we collate this list against other similar lists, and we’ll have another indicator of likely Hugo/Nebula nominees and winners. I then collate the indicators, and bam!, I have my predictive model.

My processing practice so far has been to read through the lists and every time a critic mentions a book as a “Best of 2014” (honorable mentions don’t count), to give it 1 point. Simple, or so I thought. In my previous SFF Critics Meta-List collation, I let each mention count for one vote. Thus, since 3 critics from Tor.com’s list mentioned The Goblin Emperor, it got 3 votes. This helped Goblin Emperor win the first collation.

Those multiple votes per list are becoming a problem. Here’s the Strange Horizons list (absent Adam Roberts’s choices, since I already collated them from The Guardian article he wrote, and I didn’t want his choices to count twice):

5 mentions: Annihilation/Southern Reach, VanderMeer, Jeff

2 mentions: J, Jacobson, Howard

2 mentions: The Race, Allan, Nina

2 mentions: Fire in the Unnamable Country, Islam, Ghalib

Everyone else got 1 mention each:

Europe in Autumn, Hutchinson, David

All those Vanished Engines, Park, Paul

Boy, Snow, Bird, Oyeyemi, Helen

Steles of the Sky, Bear, Elizabeth

Ancillary Sword, Leckie, Ann

Broken Monsters, Beukes, Lauren

The Bone Clocks, Mitchell, David

The Wake, Kingsnorth, Paul

Of Things Gone Astray, Matthewson, Janina

The Causal Angel, Rajaniemi, Hannu

Wolf in White Van, Darnielle, John

The Strange and Beautiful Sorrows of Ava Lavender, Walton, Leslye

The Angel of Losses, Feldman, Stephanie

The Department of Speculation, Offill, Jenny

Tigerman, Harkaway, Nick

The Girl in the Road, Byrne, Monica

Nigerians in Space, Olukotun, Deji Bryce

A good list, broad and deep, with mentions of plenty of the front-runners for the Nebula and Hugo. But can I really give Annihilation 5 points from one list? Clearly, VanderMeer won the Strange Horizons betting pool, but how much influence can I give one publication? If I collate 5 votes, that means Strange Horizons will dominate my meta-list. Not cool. On the other hand, anyone who reads this Best of 2014 is likely to come away with the feeling they better read Annihilation, so is it fair to give it only 1 point? Does that accurately reflect the intent/effect of the article?

Like I said, methodological crisis. Our only option: panic!

Fortunately, Chaos Horizon is just for fun. I’m putting together a list that may or may not predict the Hugos and Nebulas, and, even when I do, we’re only looking at a few hundred data points, not enough to be statistically sound. For the time being, I’m going to give a list like Strange Horizons (and Tor.com, and SF Signal) a maximum of 2 points. Everyone who appears at least once gets 1 point. The second point is scaled by the number of additional mentions, relative to the most-mentioned book on the list. So the top of the Strange Horizons list will look like this in the collation:

2 points: Annihilation/Southern Reach, VanderMeer, Jeff

1.25 points: J, Jacobson, Howard

1.25 points: The Race, Allan, Nina

1.25 points: Fire in the Unnamable Country, Islam, Ghalib

So, beyond the initial mentions, VanderMeer got 4 more mentions. 4/4 = 1. Jacobson got 1 more mention, 1/4 = .25. What do you think? Fair? Unfair?
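Spelled out as code, the capped scoring rule looks something like the sketch below. The mention counts are the Strange Horizons ones listed above; the function name and the handling of a list where nobody gets a repeat mention are my own assumptions, since I haven't hit that case yet.

```python
def capped_points(mentions, top_mentions, cap=2.0):
    """Score one book from one multi-critic list, capped at `cap` points."""
    if mentions < 1:
        return 0.0
    if top_mentions <= 1:
        # Assumption: with no repeat mentions anywhere, everyone just gets 1 point.
        return 1.0
    # Share of the bonus point: extra mentions relative to the top book's extras.
    extra_share = (mentions - 1) / (top_mentions - 1)
    return 1.0 + (cap - 1.0) * extra_share


strange_horizons_mentions = {
    "Annihilation/Southern Reach": 5,
    "J": 2,
    "The Race": 2,
    "Fire in the Unnamable Country": 2,
    "Europe in Autumn": 1,  # stands in for every single-mention book
}

top = max(strange_horizons_mentions.values())
points = {
    title: capped_points(count, top)
    for title, count in strange_horizons_mentions.items()
}

for title, score in points.items():
    print(f"{score:.2f} points: {title}")
```

The nice side effect of scaling against the top book is that the cap holds no matter how many critics a publication polls.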

I’ll be back tomorrow with SF Signal’s list and some more comments on the methodology for the SFF Critics Meta-List, and then I’ll recollate the list. Things are heating up this award season, so it’ll be interesting to see who pulls ahead with my evolving SFF Critics list methodology.

Blank Data Sets for the Hugo and Nebula Awards for Best Novel

I’m doing some organizing work here at Chaos Horizon, so let me put up something I’ve been meaning to for a while: blank data sets for the Hugo and Nebula Awards for Best Novel, from the beginnings to the present. These are Excel formatted lists of the Hugo and Nebula winners + nominees, sorted by year and author. It was a pain to put these together, but now that they’re cleanly formatted I wanted to share them with the community.

So long story short, anyone who wants to do their own statistical study of the Hugo and Nebulas is free to use my worksheets. Excel is a powerful tool, and given the relatively small size of the data sets (311 Nebula nominees, 288 Hugo nominees), it isn’t too hard to use. With only a small amount of work and data entry, you can be generating your own tables and graphs in no time. I’m also somewhat confident Google Docs can work with these, although I never use Google Docs myself.

The guiding principles of Chaos Horizon have always been neutrality and methodological/data transparency. Statistics are at their most meaningful when multiple statisticians are working on the same data sets. There’s a lot of information to be sorted through, and I look forward to what other statisticians will find. If you do a study, drop me an e-mail at chaoshorizon42@gmail.com or link in the comments.

Here’s the Excel File: Blank Hugo and Nebula Data Set. I’ll also perma-link this post under “Resources.”

Have fun!

The Hugo and Nebula Awards and Genre, Part 7

We’re knee deep in these awards now. Yesterday, I looked at whether or not it makes sense to break the Nebula (2001-2014) down into sub-genres (primary/secondary world, contemporary/historical, epic series/stand-alone). Today, we’ll apply that same methodology to the Hugo, so you might want to take a look at Part 6 to refresh your memory on the methodology.

By my count, there were 20 fantasy novels nominated for the Hugo between 2001-2014. Here’s the primary/secondary breakdown for the nominees:

A reasonable split, and this reflects what I’d expect. Secondary world fantasies, particularly epic series, are a little more populist/mass-market, and the Hugo is usually more receptive to those kinds of books. The secondary world novels are clustered around well-known authors: 3 Martin novels, 4 Mieville novels, 2 Bujold, and then books by Jemisin, Ahmed, and Jordan/Sanderson. The primary world novels show a better range of authors: Gaiman has 2, but 6 other authors have one each, headlined by Rowling and Walton. Now, with that 60/40 break, you’d expect secondary world novels to do well in the winner’s circle. The stats show the opposite is true:

There have been seven fantasy winners from 2001-2014, and primary world novels have dominated: Rowling, Gaiman (twice), Clarke, and Walton. Only Mieville and Bujold have grabbed wins for secondary world novels. That’s quite a flip from Chart 9 to Chart 10. While the data set is small, we should acknowledge that the Hugo voters are willing to put secondary world fantasy on the slate, but haven’t voted it into the winner’s circle very often. The City and the City is definitely a genre-boundary pushing book, and Bujold probably grabbed her win on the strength of her prior Hugo reputation (she’d already won twice before Paladin of Souls). Despite the enormous popularity of secondary world fantasy, it’s not a sub-genre that wins the Hugo (or the Nebula, for that matter). Is that destined to change?
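To make the flip concrete, here is a small sketch that tallies the nominee and winner splits from the counts given in this post: 12 secondary world nominees (3 Martin, 4 Mieville, 2 Bujold, plus Jemisin, Ahmed, and Jordan/Sanderson), 8 primary world nominees (2 Gaiman plus 6 others), and 5 primary versus 2 secondary winners. The helper name is just my own shorthand.

```python
def shares(counts):
    """Convert raw counts into fractions of the total."""
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


# Hugo fantasy nominees and winners, 2001-2014, as counted in this post.
nominees = {"secondary world": 3 + 4 + 2 + 3, "primary world": 2 + 6}
winners = {"secondary world": 2, "primary world": 5}

nominee_shares = shares(nominees)
winner_shares = shares(winners)

for label in nominees:
    print(
        f"{label}: {nominee_shares[label]:.0%} of nominees, "
        f"{winner_shares[label]:.0%} of winners"
    )
```

With only seven winners, one more secondary world win would change that picture a lot, so treat the winner shares as a pattern to watch rather than a firm rate.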

This, for me, is the “tipping point” of the modern Hugo. When will a book like A Game of Thrones win? Is Martin destined for a win once Winds of Winter comes out? Or will another author break this epic fantasy “glass ceiling”? In terms of raw popularity, a book like Words of Radiance trounces most fantasy and SF competitors, but the bias against a book like that is likely to prevent Sanderson from winning (or even being nominated). As fantasy becomes more popular, though, will this bias hold up?

Let’s break this down into sub-genres:

A fairly even division, although “stand alone secondary world fantasy” is propped up by Mieville’s 4 nominations in that sub-genre. The winners list tells a different story: the “epic series” wedge drops out entirely.

It’s these kinds of statistical oddities I find fascinating. If you asked most people to define fantasy, the “epic series” idea would pop up very quickly. Probably Tolkien first, then Martin, and then on through the entire range of contemporary fantasy: Robin Hobb, Patrick Rothfuss, N.K. Jemisin, Brandon Sanderson, Elizabeth Bear, Saladin Ahmed, Mark Lawrence, Brent Weeks, and on and on and on. So many well-known (and well-selling) writers are working in this field, and yet the Hugo has never been awarded to this kind of text. The closest you get is Paladin of Souls. Admittedly, the Bujold is pretty close, but her epic Chalion trilogy is clearly three stand-alone texts linked by a shared world.

There’s a tension here that will likely be resolved in the next 10 or so years. Can the Hugo continue to ignore the fantasy series? Is it offering a true survey/accounting of the SFF field without it?

I’m going to take a few days break from the genre study, and then wrap this up by looking at the idea of literary fiction in the Hugos and Nebulas.