A Best Saga Hugo: An Imagined Winner’s List, 2005-2014

The proposed Best Saga Hugo has been generating a lot of debate over the past few days. Check this MetaFilter round-up for plenty of opinions. I find it hard to think of awards in the abstract, so I’ve been generating potential lists of nominees. Today, I’ll try doing winners. Check out my Part 1 and Part 2 posts for some models of what might be nominated, based on the Locus Awards and Goodreads.

Today, I’m engaging in a thought experiment. What if a Best Saga Hugo had begun in 2005? I’ll use the proposal’s 400,000-word rule (although that threshold might get lowered, perhaps to 300,000, in the final proposal). You have to publish a volume in your series to be eligible that year. I’m adding one more limit: no series can win the Best Saga Hugo twice. Under those conditions, who might win?

I’m using the assumption that Hugo voters would vote for Best Saga like they vote for Best Novel and other categories. Take Connie Willis: she has 24 Hugo nominations and 11 wins. I figure the first time she’s up for a Best Saga, she’d win. This means that my imagined winners are very much in keeping with Hugo tradition; you may find that unexciting, but I find it hard to believe that Hugo voters would abandon their favorites in a Best Saga category. I went through each year and selected a favorite. Here’s what I came up with as likely/possible winners (likely, not most deserving). I’ve got some explanation below, and it’s certainly easy to flip some of these around or even include other series. Still, this gives us a rough list of potential winners to judge whether it’s a worthy Hugo:

2005: New Crobuzon, China Mieville

2006: A Song of Ice and Fire, George R.R. Martin

2007: ? ? ?

2008: Harry Potter, J.K. Rowling

2009: Old Man’s War, John Scalzi

2010: Discworld, Terry Pratchett

2011: Time Travel, Connie Willis

2012: Zones of Thought, Vernor Vinge

2013: Culture, Iain M. Banks

2014: Wheel of Time, Robert Jordan and Brandon Sanderson

Edit: I originally had Zones of Thought for 2007, having mistaken Rainbows End for part of that series. Vinge is certainly popular enough to win, so he bumped out my projected The Laundry Files win for Stross in 2012. That opened up 2007, and I don’t know what to put in that slot. Riverside by Ellen Kushner? That might not be long enough. Malazan? But that’s never gotten any Hugo play. If you have any suggestions, let me know. It’s interesting that there are some more “open” years where no huge works in a big mainstream series came out.

Notes: I gave Mieville and Scalzi early wins in their careers based on how Hugo-hot they were at the time. Mieville had scored 3 Hugo nominations in a row for his New Crobuzon novels by 2005, and there weren’t any other really big series published in 2004 for the 2005 Hugo. Scalzi was at the height of his Hugo influence around 2009, having racked up 6 nominations and 2 wins in the 2006-2009 period. These authors were such Hugo favorites I find it hard to believe they wouldn’t be competitive in a Best Saga category.

Rowling may seem odd, but she did win a Hugo in 2001 for Harry Potter, and these were the most popular fantasy novels of the decade (by far).

I wasn’t able to find space for Bujold, although the Vorkosigan saga would almost surely win a Best Saga Hugo sometime (I gave Banks the edge in 2013 because of his untimely passing; the only other time Bujold was eligible was 2011, and I think Willis is more popular with Hugo voters than Bujold). I gave Jordan the nod in 2014 for the same reason; The Expanse would probably be the closest competition that year.

Analysis: Is this imagined list of winners a good addition to the Hugos? Or does it simply re-reward authors who are already Hugo winners? Of this imagined list, 5 of these 10 authors have won the Best Novel Hugo, although only 3 of them for novels from their Saga (Willis, Vinge, and Rowling had previously won for books from their series; Scalzi and Mieville won for non-series books). The other 5 are new: Banks, Jordan, Pratchett, my 2007 wild card, and Martin, although Martin had won Best Novella Hugos for his saga works. Counting Martin as a repeat, we’re honoring 40% new work, 60% repeat work. Eh?

Overall, it seems a decent, if highly conventional, list. Those 9 are probably some of the biggest and best regarded SFF writers of the past decade. A Saga award would probably not be very diverse to start off with; some of the series projected to win have been percolating for 10-20 years. Newer/lesser known authors can’t compete. On some level, the Hugo is a popularity contest. Expecting it to work otherwise is probably asking too much of the award.

I like that Culture and Discworld might sneak in; individual novels from those series never had much of a chance at a Hugo, but I figure they’d be very competitive under a Best Saga. I’ll also add that I think Mieville and Scalzi are at their best in their series, and I’d certainly recommend new readers start with Perdido Street Station or Old Man’s War instead of The City and the City or Redshirts.

It’s interesting to imagine whether or not winning a Best Saga Hugo would have changed the Best Novel results. Would Mieville and Scalzi already having imaginary Best Saga wins have stopped The City and the City or Redshirts from winning? One powerful argument for a Best Saga Hugo is that it would open up the Best Novel category (and even Best Novella) a little more. Even someone like Willis might win in Best Saga instead of Best Novel. On the other side, people might just vote for the same books in Best Novel as in Best Saga, which would make for a pretty dull Hugos.

One interesting thing about this list, particularly if you weren’t able to win the Best Saga award twice, is that it would leave future Best Saga awards pretty wide open. You’d have The Expanse due for a win, and the Vorkosigan Saga, and the Laundry Files, maybe C.J. Cherryh’s Foreigner, probably Brandon Sanderson’s Stormlight/Cosmere, maybe Dresden Files, and then maybe Ann Leckie depending on how she extends the Imperial Radch trilogy/series, but after that—the category would really open up.

That’s what I think would happen with a Best Saga Hugo: it would be very conventional for 5-10 years as fandom clears out the consensus major series, and after that it would get more competitive, more dynamic, and more interesting. Think of it as an award investing in the Hugo future: catching up with the past at first, but then making more room in the Best Novel, Best Novella, and even Best Saga categories for other voices. Of course, that’s a best case scenario . . .

So is this a worthy set of ten imagined winners? A disappointing set? A blah set? I’ll add that, as a SFF fan, I’ve read at least one book from each of those series; I’d be interested to know how many SFF fans would be prepared to vote in a Best Saga category, or whether we’d be swamped with a new set of reading. I also feel like having read one or two books from a series gives me a good enough sense to know whether I like it or not, and thus whether I’d vote for it or not. Others might feel differently.

Modelling a Best Saga Hugo Award, Part 2

In my last post, I produced a hypothetical Best Saga Hugo model using the Locus Awards lists. In this model, I’ll use the Goodreads Choice Awards. I think this shows what might happen if the Best Saga Hugo were to become a sheer internet popularity contest, focusing on the best-selling and most-read works of the year. The Goodreads Choice is an open internet vote, and draws hundreds of thousands of participants. That’s much bigger than the WorldCon vote, so you would have to expect WorldCon voters to act differently. I think if you combine this model with the last one you’d get a decent picture of what might actually happen. Consider this the “most extreme” case of what a Best Saga would look like.

Once again, I’m not using the exact rules of the Proposed Best Saga Hugo. I think those rules (400,000 words published) are too restrictive, as some major series don’t necessarily hit that word length. You’d also have the oddity of some series becoming eligible in their second book (like Sanderson’s Stormlight Archive), some eligible in their fourth or fifth book, etc. Instead, I’m using a less restrictive model just to get a sense of what’s out there. My model is: your series is eligible once you published the THIRD novel in your series, and you are eligible again every time you publish a new NOVEL in your series.
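To make that eligibility oddity concrete, here’s a rough Python sketch comparing the two rules. The word counts are invented for illustration (I don’t have real counts for any series), and `first_eligible_by_words` and `first_eligible_by_novels` are hypothetical helper names. The sketch simply assumes words accumulate across the whole series until they reach the threshold.

```python
def first_eligible_by_words(word_counts, threshold=400_000):
    """Return the (1-based) volume at which cumulative words reach the threshold, or None."""
    total = 0
    for volume, words in enumerate(word_counts, start=1):
        total += words
        if total >= threshold:
            return volume
    return None


def first_eligible_by_novels(word_counts, minimum_volume=3):
    """Under the third-novel rule, length doesn't matter: volume 3 is always the first eligible one."""
    return minimum_volume if len(word_counts) >= minimum_volume else None


# Hypothetical series: a run of doorstopper fantasies vs. a run of short SF novels.
doorstoppers = [250_000, 250_000, 250_000]
short_novels = [90_000] * 6

print(first_eligible_by_words(doorstoppers), first_eligible_by_novels(doorstoppers))  # 2 3
print(first_eligible_by_words(short_novels), first_eligible_by_novels(short_novels))  # 5 3
```

With these made-up numbers, the word rule makes the long-book series eligible one volume early and the short-novel series two volumes late, which is the unevenness the simpler third-novel rule avoids.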

If I were actually going to make the Best Saga a real Hugo, I’d probably change “novel” to “volume” to include short story sagas, and I’d probably add a kicker that “if your series has previously won a Best Saga Hugo, you are not eligible to win another.” I’m also using a loose idea of “shared universe” rather than “continual narrative,” although that could be argued. I’d want the Hugo to be as broad as possible to make it truly competitive; the more you restrict, the fewer choices voters have.

Either way, this is just a hypothetical to see what is actually out there, and what such an award might look like in a real world situation.

Methodology: The same as last time. Goodreads publishes Top 20 lists of the most popular SF and F novels; I combed through the lists and chose the most popular that were part of a series. The Goodreads lists actually publish vote totals, so I used those to determine overall popularity. Here’s the 2013 Goodreads Choice Awards; note that these would be the books eligible for the 2014 Hugo. The Goodreads categories are a little wonky at times, so keep that in mind. They also separated out Paranormal Fantasy until 2014, so no Dresden Files or Sookie Stackhouse in the model.

2014:

Oryx and Crake, Margaret Atwood (MaddAddam, SF #1, 16,481 votes)

Silo, Hugh Howey (Dust, SF #2, 13,802 votes)

Wheel of Time, Robert Jordan and Brandon Sanderson (A Memory of Light, F #2, 13,021 votes)

Gentleman Bastards, Scott Lynch (The Republic of Thieves, F #3, 7,231 votes)

Old Man’s War, John Scalzi (The Human Division, SF #5, 4,301 votes)

2013:

The Dark Tower, Stephen King (The Wind Through the Keyhole, F #1, 8,266 votes)

Thursday Next, Jasper Fforde (The Woman Who Died a Lot, F #2, 5,221 votes)

Sword of Truth, Terry Goodkind (The First Confessor, F #3, 4,510 votes)

Ender’s Shadow, Orson Scott Card (Shadows in Flight, SF #5, 3,416 votes)

Traitor Spy, Trudi Canavan (The Traitor Queen, F #6, 3,142 votes)

Note: I left out a Star Wars book (Darth Plagueis, SF #4, 4,584 votes; how do you handle multi-author series?), and you could certainly argue The First Confessor is a new Goodkind series, not a continuation of Sword of Truth. I haven’t read either, so I can’t meaningfully comment. The next series to pop up are Robin Hobb’s (do you count all her work as one series, or as a series of trilogies?) and James S.A. Corey’s.

2012:

A Song of Ice and Fire, George R.R. Martin (A Dance with Dragons, F #1, 8,530 votes)

Discworld, Terry Pratchett (Snuff, F #4, 2,479 votes)

Thursday Next, Jasper Fforde (One of Our Thursdays is Missing, F #7, 1,584 votes)

Moirin Trilogy, Jacqueline Carey (Naamah’s Blessing, F #9, 1,391 votes)

WWW Trilogy, Robert Sawyer (Wonder, SF #12, 420 votes)

Note: Patrick Rothfuss’s The Wise Man’s Fear (Kingkiller Chronicle #2) is F #3 with 4,962 votes; given the length of The Kingkiller Chronicle books, this would probably be eligible under the 400,000 word proposal. There were not many series in the 2012 Goodreads votes. I think it’s faulty to assume that every year we’ll have lots of volumes in long series published. A real worry about the Best Saga would be that it wouldn’t be competitive every year, i.e. you might not have 5 good choices.

2011:

Wheel of Time, Robert Jordan and Brandon Sanderson (Towers of Midnight, F #1, 440 votes)

Time Travel, Connie Willis (Blackout, SF #2, 334 votes)

The Black Jewels, Anne Bishop (Shalador’s Lady, F #8, 286 votes)

Blue Ant Trilogy, William Gibson (Zero History, SF #7, 213 votes)

Vorkosigan Saga, Lois McMaster Bujold (Cryoburn, SF #9, 188 votes)

Note: You can really see how unpopular Goodreads was back in 2011; their vote totals increased more than tenfold between 2011 and 2012. I also left off a Star Wars novel here.

Winners: Atwood, King, Martin, Jordan and Sanderson. It’s interesting how popular Atwood was, even if that popularity didn’t bleed over into WorldCon circles. Could you live with this set of winners?
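Picking these winners is mechanical enough to sketch in Python: go through the years in order and take each year’s top-voted series, skipping any series that has already won. The rankings below are the Goodreads-derived lists from this post; `pick_winners` is a hypothetical helper, and the no-repeat check is the “kicker” rule I floated above, which happens not to change anything here.

```python
def pick_winners(rankings):
    """rankings: {year: [series, ...] ordered by votes}. Returns {year: winner}."""
    winners, past_winners = {}, set()
    for year in sorted(rankings):
        for series in rankings[year]:
            if series not in past_winners:  # a series may only win once
                winners[year] = series
                past_winners.add(series)
                break
    return winners


goodreads_model = {
    2011: ["Wheel of Time", "Time Travel", "The Black Jewels", "Blue Ant Trilogy", "Vorkosigan Saga"],
    2012: ["A Song of Ice and Fire", "Discworld", "Thursday Next", "Moirin Trilogy", "WWW Trilogy"],
    2013: ["The Dark Tower", "Thursday Next", "Sword of Truth", "Ender's Shadow", "Traitor Spy"],
    2014: ["Oryx and Crake", "Silo", "Wheel of Time", "Gentleman Bastards", "Old Man's War"],
}

for year, winner in pick_winners(goodreads_model).items():
    print(year, winner)
```

If Wheel of Time had topped the 2014 list instead of placing third, the rule would have handed that year to the runner-up.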

This model looks less encouraging than the Locus Awards model. I think this is what many Hugo voters are afraid of: legacy series like Ender’s Game, Sword of Truth, or even Wheel of Time, showing up long after their critical peak has worn off (if Goodkind ever had a critical peak). Series can maintain their popularity and sales long after their innovation has vanished; readers love those worlds so much that they’ll return no matter how tired and predictable the books are. A 10 or 15 year series also has 10 or 15 years to pick up fans, and it might be harder for newer series by less-established authors to compete.

Still, even the Goodreads awards were not swamped by dead-man-walking series, and the Hugo audience would probably trim some of these inappropriate works in their voting. It would be interesting to see someone like King win a Hugo for The Dark Tower; that’s certainly a very different feel than the current Hugos have.

This gets to the heart of the Saga problem: are you voting for the Saga when it was at its best, or for that specific novel? The first three Ice and Fire novels are some of the best fantasy novels of the 1990s; A Dance with Dragons is not at the same level. So what are you honoring? Martin when he was writing his best some 15 years ago? Or Martin’s writing now? Same for Pratchett. I’m a strong supporter of Discworld as being one of the major fantasy series of the last 30 years, based on works such as Small Gods and Guards! Guards!. But is Snuff equally important?

A Best Saga award would be an interesting hybrid award, split between being a “career” honor and a “this year” honor. I think it would produce a lot of debate, and if that’s what you’re looking for in a Hugo, you’d have it with a Best Saga/Best Series award.

Modelling a Best Saga Hugo Award, Part 1

Recently, there’s been plenty of chatter in the blogosphere about the proposed Best Saga Hugo Award. You can go here to see the specific proposal that will be up for vote at Sasquan this year, but this is an idea that’s been buzzing around for years: many readers (most readers?) engage with SF and particularly Fantasy in the form of multi-volume series. The Hugo Best Novel award, on the other hand, steers away from multi-volume works, privileging novels that are either the first in a series or stand-alones.

A Best Saga (or Best Series, or Best Continuing Series) Hugo would seek to solve that. While the current proposal makes you eligible after you publish 400,000 words in a series (how would readers know you’ve hit that word count? Why 400,000?) and once you get nominated you aren’t eligible again until you publish another 400,000 words, there are many ways to formulate a potential Best Saga award. I’ve heard proposals ranging from awarding it every 5 years, to only giving it once a series is finished, etc.
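Here’s how I read that counting rule, as a rough Python sketch. This is my interpretation, not the proposal’s official wording: I assume words accumulate from the start of the series, that a series is only checked in years when it publishes something, and that making the ballot resets the counter. `eligible_years` is a hypothetical helper and the word counts are invented, which also shows the bookkeeping problem: you need per-volume word counts that readers rarely have.

```python
def eligible_years(volumes, nominated_years, threshold=400_000):
    """volumes: list of (year, word_count) pairs for one series.
    nominated_years: years in which the series made the final ballot.
    Returns the years in which the series would be eligible."""
    words_by_year = {}
    for year, words in volumes:
        words_by_year[year] = words_by_year.get(year, 0) + words

    eligible, words_since_nomination = [], 0
    for year in sorted(words_by_year):  # only years with a new volume are checked
        words_since_nomination += words_by_year[year]
        if words_since_nomination >= threshold:
            eligible.append(year)
            if year in nominated_years:
                words_since_nomination = 0  # a nomination restarts the count
    return eligible


# Hypothetical series: one 150,000-word volume a year.
series = [(year, 150_000) for year in range(2010, 2016)]
print(eligible_years(series, nominated_years={2012}))  # [2012, 2015]
print(eligible_years(series, nominated_years=set()))   # [2012, 2013, 2014, 2015]
```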

But what would such an award really look like? What kind of series would get nominated? Would it reward only famous authors? Would authors end up winning both the Best Novel and the Best Saga in the same year? Would this encourage publishers to publish more and longer series? Would ants destroy the earth? So many questions, so few answers.

I find it difficult to imagine an award in the abstract, so in this post and the next I’m going to model what a hypothetical Best Saga Hugo would look like for the past 4 years (2011-2014), using two different techniques to generate my model. First up, I’ll use the Locus Awards to model what the Best Saga would look like if voted on by SFF-insiders. Then, I’ll use the Goodreads Choice Awards to model what the Best Saga would look like if the Best Saga became an internet popularity contest. Looking at those two possible models should give us a better idea of how a Best Saga Hugo would actually play out. I bet an actual award would play out somewhere in the middle of the two models.

Lastly, I’m not messing with the complexities of the whole 400,000 word thing. In my model, I’m envisioning an extremely straightforward Best Saga award: your series is eligible once you published the THIRD novel in your series, and you are eligible again every time you publish a new NOVEL in your series.

First up, the Locus Awards Best Saga Model, 2011-2014. Since the 2015 Locus Awards come out this Saturday, I’ll update the model to include 2015 once the results are in.

Methodology: The Locus Awards are voted on by the subscribers of Locus Magazine and others on the internet to determine the Best SF and F novels of the year. They publish a Top 20 list for each genre, and their data is best looked at on the Science Fiction Awards Database. These are genre enthusiasts; if you have a subscription to Locus, you’re a very involved fan. These awards have historically been very closely aligned with the Hugo Award for Best Novel, often choosing the same books. The Locus Awards are friendlier to series and sequels than the Hugos, however.

To put my list together, I looked at the tops of the SF and F Locus Award lists for the year. Using the Internet Speculative Fiction Database, I checked to see if each of the winners was part of a series. If it was Volume #3 or later, it made my list. I went down both lists equally, and stopped once I found five series. The name of the series appears first, and the name of the individual book and its place in the Locus Awards comes after.
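That list-walking procedure can be sketched in Python. The entries below are invented purely to show the filtering; `Entry` and `pick_series` are hypothetical names. Two details are my assumptions rather than anything stated above: at equal ranks the SF entry is checked before the F entry, and a series is only counted once if it shows up twice.

```python
from typing import NamedTuple, Optional


class Entry(NamedTuple):
    genre: str               # "SF" or "F"
    rank: int                # position on that genre's Locus list
    title: str
    series: Optional[str]    # None for stand-alones
    volume: Optional[int]    # position within the series, if any


def pick_series(entries, limit=5, min_volume=3):
    """Walk the SF and F lists rank by rank; keep the first `limit` series entries at volume >= min_volume."""
    # "Going down both lists equally": SF #1, F #1, SF #2, F #2, ... (SF first on ties is an assumption)
    ordered = sorted(entries, key=lambda e: (e.rank, e.genre != "SF"))
    picked, seen = [], set()
    for e in ordered:
        if e.series and e.volume and e.volume >= min_volume and e.series not in seen:
            picked.append(e)
            seen.add(e.series)  # count each series once (assumption)
            if len(picked) == limit:
                break
    return picked


# Invented entries, purely to show the filtering.
sample = [
    Entry("SF", 1, "Book A", "Series X", 3),
    Entry("F", 1, "Book B", None, None),
    Entry("SF", 2, "Book C", "Series Y", 2),
    Entry("F", 2, "Book D", "Series Z", 4),
    Entry("F", 3, "Book E", "Series X", 4),
]
print([f"{e.series} ({e.title}, {e.genre} #{e.rank})" for e in pick_series(sample)])
```

Book B is a stand-alone, Book C is only volume 2, and Book E belongs to a series already picked, so only Books A and D survive.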

2014:

The Expanse, James S.A. Corey (Abaddon’s Gate, SF #1)

Oryx and Crake, Margaret Atwood (MaddAddam, SF #4)

Foreigner, C.J. Cherryh (Protector, SF #6)

Discworld, Terry Pratchett (Raising Steam, F #7)

The Dagger and the Coin, Daniel Abraham (Tyrant’s Law, F #14)

2013:

Laundry Files, Charles Stross (The Apocalypse Codex, F #1)

Culture, Iain M. Banks (The Hydrogen Sonata, SF #3)

Vorkosigan Saga, Lois McMaster Bujold (Captain Vorpatril’s Alliance, SF #4)

The Expanse, James S.A. Corey (Caliban’s War, SF #5)

The Glamourist Histories, Mary Robinette Kowal (Glamour in Glass, F #5)

2012:

A Song of Ice and Fire, George R.R. Martin (A Dance with Dragons, F #1)

Zones of Thought, Vernor Vinge (Children of the Sky, SF #4)

Discworld, Terry Pratchett (Snuff, F #3)

The Inheritance Trilogy, N.K. Jemisin (The Kingdom of the Gods, F #6)

Jacob’s Ladder, Elizabeth Bear (Grail, SF #7)

2011:

Time Travel, Connie Willis (Blackout/All Clear, SF #1)

Vorkosigan Saga, Lois McMaster Bujold (Cryoburn, SF #2)

Laundry Files, Charles Stross (The Fuller Memorandum, F #3)

Culture, Iain M. Banks (Surface Detail, SF #4)

The Blue Ant Trilogy, William Gibson (Zero History, SF #5)

Projected Winners: Expanse, Laundry, Ice and Fire, Time Travel. Seems like a pretty credible list. Those have been some of the genre favorites of the last 4 years.

There were also some nice surprises. Iain M. Banks was only nominated for a Hugo once (for The Algebraist), but Culture showed up twice in this model. Culture was incredibly well-regarded, but also hard for new readers to jump into. I think Hugo voters felt it was unfair to ask someone to read Culture #7 or #8, and thus Banks didn’t get the nominations he deserved. Would a Best Saga award solve that problem?

Pratchett scored 2 nominations for Discworld, a nice end-of-the-career capper for his beloved fantasy series. There was a wide variety of authors in this model: Kowal, Jemisin, Gibson, Atwood, Bear, Abraham. Some super famous, some not. Maybe those would be squeezed out in an actual vote, but the Locus audience—and the Hugo audience—is fairly sophisticated. I find it hard to believe that the same Hugo voters who gave Connie Willis the Best Novel Hugo for Blackout/All Clear are going to turn around and vote Xanth or Drizzt as Best Saga every year. Who knows, though? These are only hypotheticals.

You only have one awkward year, with Willis predicted to win both Best Saga and Best Novel in 2011. Given that Willis has a staggering 11 Hugo wins already, I’m not sure that’s any more dominant than she’s already been. But I also think it possible that voters would have given Willis the Best Saga nod and someone else the Best Novel Hugo. Martin’s projected win in 2012 would be some much needed recognition for A Song of Ice and Fire: Martin’s decade-defining fantasy has only one Hugo win, a Best Novella for the Daenerys sections of A Game of Thrones. The Expanse winning is also nice: that’s become an incredibly popular and mainstream series, and it will only be bolstered by the TV show coming out this December. By using the Best Saga award to honor such populist texts, would the Hugo increase its credibility?

It would probably take a few years for a Best Saga award to settle down, but this insider model shows some promise for such an award. What do you think of this projection? Would the WorldCon be satisfied with these kinds of nominees and winners?

Next time, we’ll imagine the Best Saga as an internet popularity contest using the Goodreads Choice Awards. Stay tuned for the terrifying results!

Hugo Award Nomination Ranges, 2006-2015, Part 4

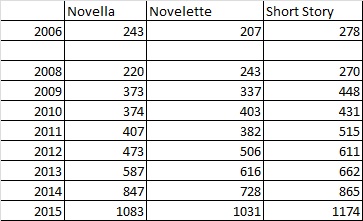

We’re up to the short fiction categories: Novella, Novelette, and Short Story. I think it makes the most sense to talk about all three of these at once so that we can compare them to each other. Remember, the Best Novel nomination ranges are in Part 3.

First up, the number of ballots per year for each of these categories:

Table 6: Year-by-Year Nominating Ballots for the Hugo Best Novella, Novelette, and Short Story Categories, 2006-2015

A sensible looking table and chart: the Short Fiction categories are all basically moving together, steadily growing. The Short Story has always been more popular than the other two, but only barely. Remember, we’re missing the 2007 data, so the chart only covers 2008-2015. For fun, let’s throw the Best Novel data onto that chart:

That really shows how much more popular the Best Novel is than the other Fiction categories.

The other data I’ve been tracking in this Report is the High and Low Nomination numbers. Let’s put all of those in a big table:

Table 7: Number of Votes for High and Low Nominee, Novella, Novelette, Short Story Hugo Categories, 2006-2015

Here we come to one of the big issues with the Hugos: the sheer lowness of these numbers, particularly in the Short Story category. Although the Short Story is one of the most popular categories, it is also one of the most diffuse. Take a glance at the far right column: that’s the number of votes the last place Short Story nominee has received. Through the mid two-thousands, it took in the mid teens to get a Hugo nomination in one of the most important categories. While that has improved in terms of raw numbers, it’s actually gotten worse in terms of percentage (more on that later).

Here’s the Short Story graph; the Novella and Novelette graphs are similar, just not as pronounced:

The Puppies absolutely dominated this category in 2015, more than tripling the Low Nom number. They were able to do this because the nominating numbers have been so historically low. Does that matter? You could argue that the Hugo nominating stage is not designed to yield the “definitive” or “consensus” or “best” ballot. That’s reserved for the final voting stage, where the voting rules are changed from first-past-the-post to instant-runoff. To win a Hugo, even in a low year like 2006, you need a great number of affirmative votes and broad support. To get on the ballot, all you need is focused passionate support, as proved by the Mira Grant nominations, the Robert Jordan campaign, or the Puppies ballots this year.
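For readers who haven’t seen instant-runoff counting, here’s a bare-bones Python sketch of the general idea. It leaves out the real Hugo details (No Award handling and the official tie-breaking rules), so treat it as an illustration rather than the WSFS procedure; `instant_runoff` is a hypothetical name. With these invented ballots, the work with the most first-place votes loses once second choices are counted.

```python
from collections import Counter


def instant_runoff(ballots):
    """ballots: list of rankings, most preferred first. Returns the winner."""
    remaining = {choice for ballot in ballots for choice in ballot}
    while True:
        # Each ballot counts for its highest-ranked work still in the race.
        tallies = Counter()
        for ballot in ballots:
            for choice in ballot:
                if choice in remaining:
                    tallies[choice] += 1
                    break
        total = sum(tallies.values())
        leader, top = tallies.most_common(1)[0]
        if 2 * top > total or len(remaining) == 1:
            return leader  # a majority of live ballots, or the last work standing
        # Drop the weakest work (alphabetical tie-break, an arbitrary choice here).
        weakest = min(sorted(remaining), key=lambda w: tallies[w])
        remaining.remove(weakest)


ballots = [["A", "B"]] * 4 + [["B", "C"]] * 3 + [["C", "B"]] * 2
print(instant_runoff(ballots))  # B: A leads round one 4-3-2, but C's voters move to B
```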

As an example, consider the 2006 Short Story category. In the nominating stage, we had a range of works that received a meager 28-14 votes, hardly a mandate. Eventual winner and oddly named story “Tk’tk’tk” was #4 in the nominating stage with 15 votes. By the time everyone got a chance to read the stories and vote in the final stage, the race for first place wound up being 231 to 179, with Levine beating Margo Lanagan’s “Singing My Sister Down.” That looks like a legitimate result; 231 people said the story was better than Lanagan’s. In contrast, 15 nomination votes looks very skimpy. As we’ve seen this year, these low numbers make it easy to “game” the nominating stage, but, in a broader sense, it also makes it very easy to doubt or question the Hugo’s legitimacy.

In practice, the difference can be even narrower: Levine made it onto the ballot by 2 votes. There were three stories that year with 13 votes, and 2 with 12. If two people had changed their votes, the Hugo would have changed. Is that process reliable? Or are the opinions of 2—or even 10—people problematically narrow for a democratic process? I haven’t read the Levine story, so I can’t tell you whether it’s Hugo worthy or not. I don’t necessarily have a better voting system for you, but the confining nature of the nominating stage is the chokepoint of the Hugos. Since it’s also the point with the lowest participation, you have the problem the community is so vehemently discussing right now.
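To put a number on that fragility, here’s a small Python sketch (a hypothetical helper, not anything from the Hugo packets) that finds the fewest nominators who would need to swap a finalist for the best work just off the ballot before the finalist drops out. It assumes every swapped nomination goes to that one challenger and treats ties as keeping the finalist on the ballot; how the real rules handle ties at the cutoff isn’t modeled. The #2 and #3 counts for 2006 weren’t given above, so 20 and 18 are placeholders; any values from 15 to 28 give the same answer.

```python
def switches_to_drop(nominee_votes, other_votes, slots=5):
    """Smallest number of swapped nominations that pushes a finalist off the ballot.

    nominee_votes: the finalist's nomination count (it must be in the top `slots`).
    other_votes: nomination counts for every other work in the category.
    Ties are resolved in the finalist's favor (a simplifying assumption)."""
    others = sorted(other_votes, reverse=True)
    for k in range(1, nominee_votes + 1):
        boosted = others.copy()
        boosted[slots - 1] += k  # the best work currently off the ballot picks up k votes
        if sum(1 for v in boosted if v > nominee_votes - k) >= slots:
            return k
    return None


# 2006 Short Story cutoff as described above: the finalist at 15, another at 14,
# then three stories at 13 and two at 12. 20 and 18 are placeholders.
print(switches_to_drop(15, [28, 20, 18, 14, 13, 13, 13, 12, 12]))  # 2
```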

Maybe we don’t want to know how the sausage is made. The community is currently placing an enormous amount of weight on the Hugo ballot, but does it deserve such weight? One obvious “fix” is to bring far more voters into the process: lower the supporting membership cost, invite other cons to participate in the Hugo (if you invited some international cons, it could actually be a “World” process every year), add a long-list stage (first round selects 15 works, the next round reduces those to 5, then the winner), etc. All of these are difficult to implement, and they would change the nature of the award (more voters = more mainstream/populist choices). Alternatively, you can restrict voting at the nominating stage to make it harder to “game,” either by limiting the number of nominees per ballot or through a more complex voting proposal. See this thread at Making Light for an in-progress proposal to switch how votes are tallied. Any proposed “fix” will have to deal with the legitimacy issue: can the Short Fiction categories survive a decrease in votes?

That’s probably enough for today; we’ll look at percentages in the short fiction categories next time.

Hugo Award Nomination Ranges, 2006-2015, Part 3

Today, we’ll start getting into the data for the fiction categories in the Hugo: Best Novel, Best Novella, Best Novelette, Best Short Story. I think these are the categories people care about the most, and it’s interesting how differently the four of them work. Let’s look at Best Novel today and the other categories shortly.

Overall, the Best Novel is the healthiest of the Hugo categories. It gets the most ballots (by far), and is fairly well centralized. While thousands of novels are published a year, these are widely enough read, reviewed, and buzzed about that the Hugo audience is converging on a relatively small number of novels every year. Let’s start by taking a broad look at the data:

Table 5: Year-by-Year Nominating Stats Data for the Hugo Best Novel Category, 2006-2015

That table lists the total number of ballots for the Best Novel Category, the Number of Votes the High Nominee received, and the Number of Votes the Low Nominee (i.e. the novel in fifth place) received. I also calculated the percentage by dividing the High and Low by the total number of ballots. Remember, if a work does not receive at least 5%, it doesn’t make the final ballot. That rule has not been invoked for the previous 10 years of the Best Novel category.
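The percentages are just each nominee’s count divided by the category’s ballot count. Here’s a minimal Python sketch of that calculation plus the 5% floor, under simplifying assumptions: each ballot is a list of titles, a work counts at most once per ballot, and ties at the cutoff are ignored. `nominating_results` is a hypothetical helper and the ballots are invented.

```python
from collections import Counter


def nominating_results(ballots, slots=5, floor=0.05):
    """Return (work, votes, share) for the top `slots` works that clear the floor."""
    # dict.fromkeys drops duplicate listings on a single ballot while keeping order
    counts = Counter(work for ballot in ballots for work in dict.fromkeys(ballot))
    total_ballots = len(ballots)
    return [
        (work, votes, votes / total_ballots)
        for work, votes in counts.most_common(slots)
        if votes / total_ballots >= floor
    ]


# 50 invented ballots: D is listed on just 1 of them (2%), so it misses the floor.
ballots = [["A", "B"]] * 30 + [["A", "C"]] * 15 + [["C"]] * 4 + [["D"]]
for work, votes, share in nominating_results(ballots):
    print(f"{work}: {votes} votes ({share:.0%})")
```

The first and last rows of the result correspond to the High and Low Nominee columns in the table.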

A couple notes on the table. The 2007 packet did not include the number of nominating ballots per category, thus the blank spots. The red flagged 700 indicates that the 2010 Hugo packet didn’t give the # of nominating ballots. They did give percentages, and I used math to figure out the number of ballots. They rounded, though, so that number may be off by +/- 5 votes or so. The other red flags under “Low Nom” indicate that authors declined nominations in those years, both times Neil Gaiman, once for Anansi Boys and another time for The Ocean at the End of the Lane. To preserve the integrity of the stats, I went with the book that originally was in fifth place. I didn’t mark 2015, but I think we all know that this data is a mess, and we don’t even really know the final numbers yet.
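Back-calculating a ballot count from a rounded percentage gives a range rather than a single number. Here’s a Python sketch, assuming percentages are rounded to one decimal place with halves rounding up; `ballot_range` is a hypothetical helper. Rather than guess at the 2010 packet’s raw figures, the example uses the Corey numbers from later in this post: 71 votes reported as 7.4%, and 90 votes reported as 8.1%.

```python
import math
from fractions import Fraction


def ballot_range(votes, reported_pct, decimals=1):
    """Smallest and largest ballot counts n for which votes/n rounds to reported_pct."""
    half_step = Fraction(1, 2 * 10**decimals)  # e.g. 0.05 percentage points
    pct = Fraction(str(reported_pct))          # exact decimal, no float drift
    low_share = (pct - half_step) / 100        # smallest share that still rounds to pct
    high_share = (pct + half_step) / 100       # shares at or above this round up instead
    # votes/n >= low_share  ->  n <= votes/low_share
    # votes/n <  high_share ->  n >  votes/high_share
    return math.floor(votes / high_share) + 1, math.floor(votes / low_share)


print(ballot_range(71, 7.4))  # (954, 965)
print(ballot_range(90, 8.1))  # (1105, 1118)
```

A window of about a dozen ballots is in line with the “+/- 5 votes or so” caveat above.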

Enough technicalities. Let’s look at this visually:

That’s a soaring number of nominating ballots, while the high and low ranges seem to be languishing a bit. Let’s switch over to percentages:

Much flatter. Keep in mind I had to shorten the year range for the % graph, due to the missing 2007 data.

Even though the number of ballots are soaring, the % ranges are staying somewhat steady, although we do see year-to-year perturbation. The top nominees have been hovering between 15%-22.5%. Since 2009, every top nominee has managed at least 100 votes. The bottom nominee has been in that 7.5%-10% range, safely above the 5% minimum. Since 2009, those low nominees all managed at least 50 votes, which seems low (to me; you may disagree). Even in our most robust category, 50 readers liking your book can get you into the Hugo—and they don’t even have to like it the most. It could be their 5th favorite book on their ballot.

With low ranges so low, it doesn’t (or wouldn’t) take much to place an individual work onto the Hugo ballot, whether by slating or other types of campaigning. Things like number of sales (more readers = more chances to vote), audience familiarity (readers are more likely to read and vote for a book by an author they already like) could easily push a book onto the ballot over a more nebulous factor like “quality.” That’s certainly what we’ve seen in the past, with familiarity being a huge advantage in scoring Hugo nominations.

With our focus this close, we see a lot of year-to-year irregularity. Some years are stronger in the Novel category, others weaker. As an example, James S.A. Corey actually improved his percentage total from 2012 to 2013: Leviathan Wakes grabbed 7.4% (71 votes) for the #5 spot in 2012, and then Caliban’s War 8.1% (90 votes) for the #8 spot in 2013. That kind of oddity (more Hugo voters, both in sheer numbers and percentage-wise, liked Caliban’s War, but only Leviathan Wakes got a Hugo nom) has always defined the Hugo.

What does this tell us? This is a snapshot of the “healthiest” Hugo: rising votes, a high nom average of about 20%, a low nom average of around 10%. Is that the best the Hugo can do? Is it enough? Do those ranges justify the weight fandom places on this award? Think about how this will compare to the other fiction categories, which I’ll be laying out in the days to come.

Now, a few other pieces of information I was able to dig up. The Worldcons are required to give data packets for the Hugos every year, but different Worldcons choose to include different information. I combed through these to find some more vital pieces of data, including Number of Unique Works (i.e. how many different works were listed on all the ballots, a great measure of how centralized a category is) and Total Number of Votes per category (which lets us calculate how many nominees each ballot listed on average). I was able to find parts of this info for 2006, 2009, 2013, 2014, and 2015.

Table 6: Number of Unique Works and Number of Votes per Ballot for Selected Best Novel Hugo Nominations, 2006-2015

I’d draw your attention to the ratio I calculated, which is the Number of Unique Works / Number of Ballots. The higher that number is, the less centralized the award is. Interestingly, the Best Novel category is becoming more centralized the more voters there are, not less centralized. I don’t know if that is the impact of the Puppy slates alone, but it’s interesting to note nonetheless. That might indicate that the more voters we have, the more votes will cluster together. I’m interested to see if the same trend holds up for the other categories.
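Both numbers in the table come from simple divisions. This Python sketch computes them from raw ballots, which the packets don’t publish, so the ballots here are invented and `category_stats` is a hypothetical helper. It shows why the ratio tracks centralization: two sets of ballots with the same number of nominations per ballot can have very different ratios.

```python
def category_stats(ballots):
    """Return (unique works / ballots, nominations / ballots) for one category."""
    cleaned = [set(b) for b in ballots]          # a work counts once per ballot
    unique_works = len(set().union(*cleaned))
    total_votes = sum(len(b) for b in cleaned)
    return unique_works / len(ballots), total_votes / len(ballots)


clustered = [["A", "B", "C"], ["A", "B", "C"], ["A", "B", "D"], ["A", "C", "D"]]
scattered = [["A", "B", "C"], ["D", "E", "F"], ["G", "H", "I"], ["J", "K", "L"]]
print(category_stats(clustered))  # (1.0, 3.0)
print(category_stats(scattered))  # (3.0, 3.0)
```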

Lastly, look at the average number of votes per ballot. Your average Best Novel nominator votes for over 3 works. That seems like good participation. I know people have thrown out the idea of restricting the number of nominations per ballot, either to 4 or even 3. I’d encourage people to think about how much of the vote that would suppress, given that some people vote for 5 and some people only vote for 1. Would you lose 5% of the total vote? 10%? I think the Best Novel category could handle that reduction, but I’m not sure other categories can.
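To put a rough number on that question, here is a hedged Python sketch of how much of the vote a cap would discard. It runs on a hypothetical distribution of ballot sizes (not real Hugo data) and assumes voters simply drop their extra picks rather than changing them.

```python
from __future__ import annotations


def votes_lost_under_cap(ballot_sizes: dict[int, int], cap: int) -> float:
    """Fraction of all nominations discarded if each ballot may list at most `cap` works.

    ballot_sizes maps "works listed on a ballot" -> "number of ballots listing that many".
    Assumes voters drop their extra picks rather than changing them.
    """
    total = sum(size * count for size, count in ballot_sizes.items())
    kept = sum(min(size, cap) * count for size, count in ballot_sizes.items())
    return (total - kept) / total


# HYPOTHETICAL distribution of 1,000 ballots (average 3.35 picks), not real Hugo data.
hypothetical = {1: 150, 2: 150, 3: 200, 4: 200, 5: 300}

for cap in (5, 4, 3):
    print(f"cap of {cap}: {votes_lost_under_cap(hypothetical, cap):.1%} of nominations lost")
```

Under that made-up distribution, a 4-work cap would cost about 9% of nominations and a 3-work cap nearly 24%; the real answer depends on how many voters actually fill all five slots.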

Think of these posts—and my upcoming short fiction posts—as primarily informational. I don’t have a ton of strong conclusions to draw for you, but I think it’s valuable to have this data available. Remember, my Part 1 post contains the Excel file with all this information; feel free to run your own analyses and number-crunching. If you see a trend, don’t hesitate to mention it in the comments.

Declined Hugo and Nebula and other SFF Nominations

Since Chaos Horizon is a website dedicated to gathering stats and information about SFF awards, particularly the Hugos and Nebulas, a list of declined award nominations might prove helpful to us. There’s a lot of information out there, but it’s scattered across the web and hard to find. Hopefully we can gather all this information in one place as a useful resource.

So, if you know of any declined nominations (in the Hugos and Nebulas or other major SFF awards), drop the info in the comments. I have not included books withdrawn for eligibility reasons (usually, publication in a previous year). I’ll keep the list updated and stash it in my “Resources” tab up at the top.

Declined Hugo Best Novel nominations:

1972 Best Novel: Robert Silverberg, The World Inside (source: NESFA.org’s excellent Hugo website; Silverberg allegedly declined in order to give his other Hugo-nominated novel that year, A Time of Changes, a better chance)

1979 Best Novel: James Tiptree, Jr., Up the Walls of the World (source: NESFA.org; I have no idea what the story is here)

1989 Best Novel: P. J. Beese and Todd Cameron Hamilton, The Guardsman (source: NESFA.org; Jo Walton has an interesting snippet on this from her Hugo series, noting that the book was disqualified because of block voting)

2005 Best Novel: Terry Pratchett, Going Postal (source: 2005 Hugo Page, nomination links at bottom, Pratchett’s statement that he just wanted to enjoy the event)

2006 Best Novel: Neil Gaiman, Anansi Boys (source: Gaiman’s statement, 2006 Hugo Page, nomination stats at bottom)

2014 Best Novel: Neil Gaiman, The Ocean at the End of the Lane (source: 2014 Hugo Page, nomination stats at bottom)

2015 Best Novel: Larry Correia, Monster Hunter Nemesis (source: Correia’s website)

2015 Best Novel: Marko Kloos, Lines of Departure (source: Kloos’s website)

An interesting list. Anansi Boys might have won the 2006 Best Novel Hugo over Robert Charles Wilson’s Spin; Gaiman was incandescently hot at the time. I don’t think Pratchett would have won, as Going Postal isn’t his best work, and Jonathan Strange & Mr. Norrell was a sensation that year. Gaiman wasn’t going to beat Ann Leckie’s Ancillary Justice in 2014.

Declined Nebula Best Novel nominations:

2012 Best Novel: John Scalzi, Redshirts (source: I found this mention on Scalzi’s blog; search the comments for “Redshirts”)

2312 by Kim Stanley Robinson won in 2012; I think Redshirts would have been competitive, although the Nebulas have never been particularly friendly to Scalzi. I’m sure this has happened other times in the Nebula, but the Nebula is more of a closed-shop award, and they don’t publicize what happens behind the scenes as much as the Hugos.

Other Declined Nominations (story categories, other awards):

1971 Hugo Novella: Fritz Leiber, “The Snow Women” (source: NESFA.org; Leiber was up against himself this year, for the eventual winner “Ill Met in Lankhmar”)

1982 Nebula Short Story: Lisa Tuttle, “The Bone Flute” (source: Ansible; Tuttle said that she had “written to withdraw my short story from consideration for a Nebula, in protest at the way the thing is run, and in the hope that my protest might move the Nebula Committee to institute a few simple rules (like, either making sure that all items up for consideration are sent around to all the voters; or else disqualifying works which are campaigned for by either the authors or the editors) which would make the whole Nebula system less of a farce”; she still won, then refused the award)

1990 Hugo Novella: George Alec Effinger, “Marîd Changes His Mind” (source: NESFA.org; I have no clue why)

1991 Hugo Novella: Lois McMaster Bujold, “Weathermen” (source: NESFA.org; I have no clue why; EDIT: Mark mentioned that the first six chapters of The Vor Game, which won the Best Novel Hugo that year, are a lightly modified version of “Weathermen”; perhaps Bujold withdrew for that reason)

2003 Hugo Novella: Ted Chiang, “Liking What You See: A Documentary” (source: NESFA.org; Chiang allegedly felt it didn’t live up to his best work; I’m also linking this Frank Wu article from Abyss & Apex because it has some more discussion of other declined Hugo noms in other categories)

2015 Hugo Short Story: Annie Bellet, “Goodnight Stars” (source: Bellet’s website)

I know I must have missed plenty—I’m not necessarily plugged in to the inner workings of the SFF world. What other authors have declined, and why?

Literary Fiction and the Hugo and Nebula Awards for Best Novel, 2001-2014

A sub-category of my broader genre study, this post addresses the increasing influence of “literary fiction” on the contemporary Hugo and Nebula Awards for Best Novel, 2001-2014. I think the general perception is that the awards, particularly the Nebula, have begun nominating novels that include minimal speculative elements. Rather than simply trust the general perception, let’s look to see if this assumption lines up with the data.

Methodology: I looked at the Hugo and Nebula nominees from 2001-2014 and classified each book as either primarily “speculative” or “literary.” Simple enough, right?

Defining “literary” is a substantial and significant problem. While most readers would likely acknowledge that Cloud Atlas is a fundamentally different book than Rendezvous with Rama, articulating that difference in a consistent manner is complicated. The Hugos and Nebulas offer no help themselves. Their by-laws are written in an incredibly vague fashion that does not define what “Science Fiction or Fantasy” actually means. Here’s the Hugo’s definition:

Unless otherwise specified, Hugo Awards are given for work in the field of science fiction or fantasy appearing for the first time during the previous calendar year.

Without a clear definition of “science fiction or fantasy,” it’s left up to WorldCon or SFWA voters to set genre parameters, and they are free to do so in any way they wish.

All well and interesting, but that doesn’t help me categorize texts. I see three types of literary fiction entering into the awards:

1. Books by literary fiction authors (defined as having achieved fame in the literary fiction space before their Hugo/Nebula nominated book) that use speculative elements. Examples: Cloud Atlas, The Yiddish Policemen’s Union.

2. Books by authors in SFF-adjacent fields (primarily horror and weird fiction) that have moved into the Hugo/Nebulas. These books often allow readers to see the “horror” elements as either being real or imagined. Examples: The Drowning Girl, Perfect Circle, The Girl in the Glass.

3. Books by already well-known SFF authors who are using techniques and styles more common in literary fiction. Examples: We Are All Completely Beside Ourselves, Among Others.

That’s a broad set of different texts. To cover all those texts—remember, at any point you may push back against my methodology—I came up with a broad definition:

I will classify a book as “literary” if a reader could pick the book up, read a random 50 page section, and not notice any clear “speculative” (i.e. non-realistic) elements.

That’s not perfect, but there’s no authority we can appeal to for these classifications. Let’s see how it works:

Try applying this to Cloud Atlas. Mitchell’s novel consists of a series of entirely realistic novellas set in various periods of history and two speculative novellas set in the future. If you just picked the book up and started reading, chances are you’d land in one of the realistic sections, and you wouldn’t know it could be considered an SFF book.

Consider We Are All Completely Beside Ourselves, Karen Joy Fowler’s rich meditation on science, childhood, and memory. Told in realistic fashion, it follows a young woman whose parents raised a chimpanzee alongside her, and traces how this early childhood relationship shapes her college years. While this isn’t the place to decide if Fowler deserved a Nebula nomination (she won the PEN/Faulkner Award and was shortlisted for the Booker for this same book, so quality isn’t much of a question), the styles, techniques, and focus of Fowler’s book are intensely realistic. Unless you’re told it could be considered an SF novel, you’d likely take it for plain old realistic fiction.

With this admittedly imperfect definition in place, I went through the nominees. For the Nebula, I counted 13 out of 87 nominees (15%) that met my definition of “literary.” While a different statistician might classify books differently, I imagine most of us would be in the same ballpark. I struggled with The City & The City, which takes place in a fictional dual city and uses a noir plot; I eventually saw it as more Pynchonesque than speculative, so I counted it as “literary.” I placed The Yiddish Policemen’s Union as literary fiction because of Chabon’s earlier fame as a literary author; after he establishes the “Jews in Alaska” premise, large portions of the book are straightforwardly realistic. Other books, such as The Drowning Girl, could be read either as speculative or not. Borderline cases all went into the “literary” category for this study.

Given that I like the Chabon and Mieville novels a great deal, I’ll emphasize that I don’t think being “literary” is a problem. Since these kinds of books are not forbidden by the Hugo/Nebula by-laws, they are fair game to nominate. These books certainly change the nature of the award, and there are real inconsistencies in which literary SFF books get nominated (no Haruki Murakami nominations, no nomination for The Road).

As for the Hugos, only 4 out of 72 nominees met my “literary” definition. Since the list is small, let me name them here: The Years of Rice and Salt (Robinson’s realistically told alternative history), The Yiddish Policemen’s Union, The City & The City, and Among Others. Each of those pushes the genre definitions of speculative fiction. Two are flat-out alternative histories, which have traditionally been considered an SFF category, although I think the techniques used by Robinson and Chabon are very reminiscent of literary fiction. The Mieville is an experimental book, and the Walton is as much a book “about SFF” as it is SFF. I’d note that 3 of those 4 (all but the Robinson) received Nebula nominations first, and that Nebula noms have a huge influence on the Hugo noms.
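The percentages here are just flagged books divided by total nominees, using 1/0 flags as in my spreadsheet. A short Python sketch, with the flag lists rebuilt from the counts above and the 14 award years from 2001 to 2014 used for a per-year rate (the function name is my own):

```python
from __future__ import annotations


def literary_share(flags: list[int]) -> float:
    """flags: one entry per nominee, 1 if it met the "literary" definition, else 0."""
    return sum(flags) / len(flags)


YEARS = 2014 - 2001 + 1  # award years covered by the study

# Flag lists rebuilt from the counts above: 13 of 87 Nebula nominees, 4 of 72 Hugo nominees.
nebula = [1] * 13 + [0] * (87 - 13)
hugo = [1] * 4 + [0] * (72 - 4)

for name, flags in (("Nebula", nebula), ("Hugo", hugo)):
    print(f"{name}: {literary_share(flags):.1%} literary, {sum(flags) / YEARS:.2f} per year")
```

That gives roughly 15% for the Nebula and under 6% for the Hugo, or just under one “literary” Nebula nominee per year.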

Let’s look at this visually:

Even with my relatively generous definition of “literary,” that’s not a huge encroachment. Roughly 1 in 6 of the Nebula noms have been from the literary borderlands, which is lower than what I’d expected. While 2014 had 3 such novels (the Fowler, Hild, and The Golem and the Jinni), the rest of the 2010s had about 1 borderline novel a year.

The Hugos have been much less receptive to these borderline texts, usually nominating them only after the Nebula has done so. We should note that both Chabon and Walton won, once again mirroring the results of the Nebula.

So what can we make of this? The Nebula nominates a “literary” book about one time in six, or roughly once per year. The Hugo does this far less often, and usually when a book catches fire in the Nebula process. While this represents a change in the awards, particularly the Nebula, it is nowhere near as rapid or significant as the changes regarding fantasy (which are around 50% Nebula and 30% Hugo). I know some readers think “literary” stories are creeping into the short story categories; I’m not an expert on those categories, so I can’t meaningfully comment.

I’m going to use the 15% Nebula and 5% Hugo “literary” number to help shape my predictions. I may have been overestimating the receptiveness of the Nebula to literary fiction; this study suggests we’d see either Mitchell or Mandel in 2015, not both. Here’s the full list of categorizations. I placed a 1 by a text if it met the “literary” definition: Lit Fic Study.

Questions? Comments?

Best of 2014: Updated SFF Critics Meta-List

Hot off the presses is my newly collated SFF Critics Meta-List! This list includes 8 different “Best of 2014” lists, all by outlets that have a reasonable chance of either reflecting or influencing the Hugo/Nebula awards.

Currently included: Coode Street Podcast, io9, SF Signal, Strange Horizons, Jeff VanderMeer writing for Electric Literature, Adam Roberts writing for The Guardian, Tor.com, and A Dribble of Ink. The lists were chosen because of their reach and previous reliability in predicting the Hugos/Nebulas (Tor, io9); the fame of the authors (VanderMeer, Roberts); or because the website/fancast has been recently nominated for a Hugo (Dribble, Strange Horizons, SF Signal, Coode Street). Any comments/questions about methodology are welcome.

Points: 1 point per list, unless the list is a collation of more than 3 critics (SF Signal, Strange Horizons, Tor.com). In that case, books can grab a maximum of 2 points, pro-rated for # of mentions on the list. See here for an explanation of this methodology.
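To make the scoring rule concrete, here is a minimal Python sketch. Only the 1-point rule, the more-than-3-critics threshold, and the 2-point cap come from the description above; the exact pro-rating formula is in my earlier methodology explanation, so the 0.5-points-per-mention step and the example book are hypothetical stand-ins.

```python
from __future__ import annotations

COLLATED_THRESHOLD = 3   # lists collating more than 3 critics get pro-rated scoring
COLLATED_CAP = 2.0       # maximum points a book can earn from one collated list
PER_MENTION = 0.5        # HYPOTHETICAL pro-rating step; the real formula may differ


def list_points(mentions: int, critics_on_list: int) -> float:
    """Points one venue contributes to a book under the rule described above."""
    if mentions <= 0:
        return 0.0
    if critics_on_list <= COLLATED_THRESHOLD:
        return 1.0  # ordinary list: 1 point for appearing at all
    return min(COLLATED_CAP, mentions * PER_MENTION)


def meta_score(entries: list[tuple[int, int]]) -> float:
    """entries: one (mentions, critics_on_list) pair per venue that lists the book."""
    return sum(list_points(m, c) for m, c in entries)


# HYPOTHETICAL book: one single-critic list, one 3-person podcast,
# and 3 mentions on a 20-critic roundup.
print(meta_score([(1, 1), (1, 3), (3, 20)]))
```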

6.5: Ancillary Sword

6: Annihilation

5: The Goblin Emperor

4.5: The Magician’s Land

4: Broken Monsters

4: The Bone Clocks

3.5: City of Stairs

3: The First Fifteen Lives of Harry August

3: The Girls at the Kingfisher Club

3: The Girl in the Road

3: Lagoon

3: All Those Vanished Engines

3: The Peripheral

3: The Three-Body Problem

2.25: The Race

2: Tigerman

2: Steles of the Sky

2: Our Lady of the Streets

2: Nigerians in Space

2: Europe in Autumn

2: A Man Lies Dreaming

2: Station Eleven

2: The Martian

2: Half a King

2: The Causal Angel

2: Wolves

2: The Book of Strange New Things

2: The Memory Garden

2: My Real Children

Pretty much what I expected. The SFF world is often very repetitive (nominating the same authors over and over again), so Leckie makes sense at #1. She was so talked about for last year’s award that she’s a natural for this year, even if people are less excited about Ancillary Sword. VanderMeer, Mitchell, and Bennett are no surprise near the top.

Addison’s The Goblin Emperor is doing well, particularly when the lists are a little more fan-oriented. She does represent a methodological problem: she has 2 points from SF Signal and 2 points from Tor.com, as well as 1 point from A Dribble of Ink. That’s concentrated, not broad, support. In contrast, Leckie’s 6.5 points are spread out across 6 venues. If I gave 1 point max per venue, Addison would be knocked down to only 3 points. I’ll keep my eye on the math of this, and make adjustments to my counting as necessary. Remember, the goal is to be predictive, not perfect. We can just wait until the Nebula noms come out, and then reassess at that point.
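Here is a small Python sketch of the two counting schemes, using the Goblin Emperor breakdown just described. The function and its optional per-venue cap are my own framing of the alternative, not a settled change to the method.

```python
from __future__ import annotations


def total_points(points_by_venue: dict[str, float], per_venue_cap: float | None = None) -> float:
    """Sum a book's points, optionally capping what any single venue can contribute."""
    if per_venue_cap is None:
        return sum(points_by_venue.values())
    return sum(min(p, per_venue_cap) for p in points_by_venue.values())


# The Goblin Emperor's breakdown as described above.
addison = {"SF Signal": 2.0, "Tor.com": 2.0, "A Dribble of Ink": 1.0}

print(total_points(addison))        # current rule
print(total_points(addison, 1.0))   # 1-point cap per venue
print(len(addison), "venues")       # breadth of support
```

The capped total rewards breadth: a book needs mentions from many venues, not heavy support from a couple of collated lists.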

In terms of Addison’s chances: secondary world fantasy is not an easy sell to the Nebula voters. I wouldn’t be shocked to see her on the slate, but I also wouldn’t be surprised if she missed. Given the way that the Nebula slate shapes the Hugo slate, her Hugo chances are closely tied to whether or not she grabs a Nebula nom.

Lev Grossman poses something of a problem. The Magicians trilogy is very well regarded, and books like this (fantasy that crosses over into the real world) have done well in the Nebulas as of late. Still, the last books of trilogies have usually NOT been part of the Hugo/Nebula process; it’ll be interesting to see if that bias continues.

Beukes is something of a surprise with Broken Monsters. It’s a serial-killer novel that crosses over into the supernatural in its last 50 pages, so I’m not sure it’s speculative enough to grab an award nomination. Still, Beukes almost made the Hugo slate last year, so don’t count this one out.

Some lesser known works, at least to Americans: Lagoon (no U.S. release, so Nebula eligibility is unlikely), All Those Vanished Engines by Paul Park (not very hyped in the U.S.), The Race by Nina Allan (released in the U.S., but not widely known over here). It’ll be interesting to see if these works continue to be part of the conversation.

The snubs: Station Eleven isn’t taking this list by storm. That might reflect a simple timeline problem: the Mandel came out late in the year, so people might only be getting to read it now. The Martian is also way down, but is that because it wasn’t a 2014 book?

I’d still like to add several more lists to this collation to see if we get a better convergence. Locus Magazine will have their “Best of 2014,” and I’m waiting for several Hugo nominated blogs to get their lists out (Book Smugglers, Elitist). Anyone else you would suggest for this collation?

Here’s the data: Best of 2014. This list is on the second tab.

Best of 2014: Coode Street Podcast

As I’m putting together my “SFF Critics Best of 2014 Meta-List,” I’ve been trying to find lists that are likely to be reflective of the Hugo/Nebula voters. I don’t want to be mired in “old media,” so I thought I’d better find some “Best of 2014” podcasts to include.

The Coode Street Podcast, by Gary K. Wolfe and Jonathan Strahan, has twice been nominated for the “Best Fancast” Hugo Award. Wolfe is a prominent reviewer for Locus Magazine, and Strahan a frequent editor, including for the Best Science Fiction and Fantasy of the Year series. Probably good voices to listen to.

With guest author James Bradley, they recently put up a “Best of 2014” podcast. It’s an hour-long discussion, and it ranges over a large number of important works from 2014. Here’s the list of what they identify as the best of the year:

Wolves, Simon Ings

The Magician’s Land, Lev Grossman

The Bone Clocks, David Mitchell

Clariel, Garth Nix

Beautiful Blood, Lucius Shepard

The Memory Garden, Mary Rickert

Academic Exercises, K.J. Parker

Stone Mattress, Margaret Atwood

Lagoon, Nnedi Okorafor

Half a King, Joe Abercrombie

Bathing the Lion, Jonathan Carroll

Bête, Adam Roberts

The Peripheral, William Gibson

The Girls at the Kingfisher Club, Genevieve Valentine

My Real Children, Jo Walton

The Blood of Angels, Johanna Sinisalo

All Those Vanished Engines, Paul Park

The Book of Strange New Things, Michel Faber

Consumed, David Cronenberg

Annihilation, Jeff VanderMeer

The Girl in the Road, Monica Byrne

Ancillary Sword, Ann Leckie

Echopraxia, Peter Watts

The Causal Angel, Hannu Rajaniemi

Orfeo, Richard Powers

The Three-Body Problem, Cixin Liu

Questionable Practices, Eileen Gunn

Proxima, Stephen Baxter

The Race, Nina Allan

Crashland, Sean Williams

This list is more international than most, and it brings up an interesting point: major SF novels are getting published in England that aren’t getting published in the US. Lagoon, for instance, would be in the award mix if it had received a US publication. Without that, though, you’re cutting off too much of your potential audience (and probably aren’t even eligible for the Nebula). Books like Wolves or even Europe in Autumn (which was published here but not really marketed) might be worthy of award consideration, but losing over half their potential audience is going to make a Hugo or Nebula nomination next to impossible.

Coode Street touches on many of the major candidates, and I found their framing of the year in SF quite useful. Coode Street is more interested in SF than in fantasy, and they don’t discuss some of the fantasy candidates (such as City of Stairs or The Goblin Emperor). With a large number of lists, these genre imbalances should work themselves out.

I’ll update and post the Meta-List later today.

Blank Data Sets for the Hugo and Nebula Awards for Best Novel

I’m doing some organizing work here at Chaos Horizon, so let me put up something I’ve been meaning to for a while: blank data sets for the Hugo and Nebula Awards for Best Novel, from the beginnings to the present. These are Excel formatted lists of the Hugo and Nebula winners + nominees, sorted by year and author. It was a pain to put these together, but now that they’re cleanly formatted I wanted to share them with the community.

So, long story short: anyone who wants to do their own statistical study of the Hugos and Nebulas is free to use my worksheets. Excel is a powerful tool, and given the relatively small size of the data sets (311 Nebula nominees, 288 Hugo nominees), it isn’t too hard to use. With only a little work (and some data entry), you can be generating your own tables and graphs in no time. I’m also fairly confident Google Docs can work with these, although I never use Google Docs myself.
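For anyone who would rather work in Python than Excel, here is a hedged sketch of one basic tally (nominations and wins per author) from a CSV export of a worksheet. The column names (Year, Award, Author, Title, Result) and the sample rows are hypothetical placeholders, not the actual layout of my workbook.

```python
from __future__ import annotations

import csv
import io
from collections import Counter

# HYPOTHETICAL CSV export; the real workbook's column names and layout may differ.
SAMPLE_CSV = """Year,Award,Author,Title,Result
2013,Hugo,Author A,Title 1,Nominee
2014,Hugo,Author A,Title 2,Winner
2014,Nebula,Author B,Title 3,Nominee
"""


def tally(rows: list[dict[str, str]], award: str) -> tuple[Counter, Counter]:
    """Count nominations and wins per author for one award."""
    picked = [r for r in rows if r["Award"] == award]
    noms = Counter(r["Author"] for r in picked)
    wins = Counter(r["Author"] for r in picked if r["Result"] == "Winner")
    return noms, wins


# With a real export, read from open("your_export.csv", newline="") instead of StringIO.
rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
hugo_noms, hugo_wins = tally(rows, "Hugo")
print(hugo_noms.most_common())
print(hugo_wins.most_common())
```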

The guiding principles of Chaos Horizon have always been neutrality and methodological/data transparency. Statistics are at their most meaningful when multiple statisticians are working on the same data sets. There’s a lot of information to be sorted through, and I look forward to what other statisticians will find. If you do a study, drop me an e-mail at chaoshorizon42@gmail.com or link in the comments.

Here’s the Excel File: Blank Hugo and Nebula Data Set. I’ll also perma-link this post under “Resources.”

Have fun!