2014 Nebula Award Prediction: Indicator #10

This is more of a speculative indicator: surely the ratings a book receives (both how many ratings and the overall score) give us some indication of how it’ll do. I’m thinking specifically of the ratings on Amazon and Goodreads as solid indicators of public reaction to a text. Take a look at the Amazon rating of Martin’s A Game of Thrones (4.4) versus A Feast for Crows (3.6). The first book is widely beloved; the fourth in his series is considered a disappointment. That’s just one example of how much such scores can vary depending on whether readers like the book. Presumably, a book has to be well liked to win the Nebula.

Unfortunately, there’s no historical data for this, as we can’t go back in time and see how the Nebula nominees were rated on Goodreads or Amazon when they were nominated. After the nominations (and particularly the winner) come out, new readers are drawn to the books, which taints any statistical significance those ratings might have.

Nonetheless, we can start collecting data and see if any correlation shows up in future years (2015 and beyond). For now, the weight of Indicator #10 will be 0, as there is not yet enough data to be reliable or meaningful.

Goodreads and Amazon provide us with two useful reader measures: the number of ratings (showing how many people have read the book) and the score (showing whether they liked it). More readers plus a more positive reaction has to equal more wins, doesn’t it?

Indicator #10: The novel is frequently reviewed and highly scored on Goodreads and Amazon.

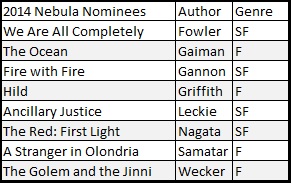

Let’s take a look at this year’s nominees, as of right after the nominations:

Here, AMZ = Amazon and GR = Goodreads.

What does this data mean? Who knows? One thing seems clear: the easiest way to get a high score is to not be rated much. I suspect some kind of correlation can be worked out, but we don’t have enough data to make it clear. Interestingly, our frontrunners (Gaiman and Leckie) are in the middle of the pack when it comes to scores, but Gaiman has a clear advantage in how often his book has been read. For all we know, the most-read book every year might win the Nebula. We’ll have to wait for a few more years of data to see.

2014 Nebula Prediction: Indicators #8 and #9

Now we’re on to the critical reception of these books. Critics play an important role in bringing attention to novels, and give us a good indication as to whether or not these books are well respected. While the internet does provide year-end critics’ lists, these are a relatively new phenomenon, and many of them don’t have long histories.

I looked at a number of possible year-end critics’ lists. Locus Magazine’s Recommended Reading List was by far the best of the bunch. It has a long history, and the eventual Nebula winner has appeared on it 12 of 13 times since 2000, the only exception being Asaro’s win for The Quantum Rose in 2002.

Beyond that list, nothing has the same kind of reliability or history. I looked at the NPR and Publishers Weekly lists, but the Nebula winners haven’t consistently appeared on their SF/F lists. A couple of online sources are more promising, Tor.com and io9.com, but they don’t have long histories: io9 has three years of lists (including this year), and Tor.com only two. Still, the winners have appeared on their lists, so that’s something. I’m going to give this indicator a low weight and see if it improves over time.

Indicator #8: Nominee appears on the Locus Magazine Recommended Reading List (92.3%)

Indicator #9: Nominee appears on the Tor.com or io9.com Year-End Critics’ list (100%, but low weight due to lack of history)

So where does that put us for this year?

As we might expect from previous indicators, Gaiman and Leckie rise to the top, appearing on all the recommended lists for the year. Everyone else was divided. Fowler, Griffith, and Wecker likely missed some lists because of the lack of genre elements in their work, not the quality of their writing. This same confusion over genre, however, will likely extend to the voters and hurt them in the final tally.

Once again, two more indicators clearly “won” by Gaiman and Leckie.

2014 Nebula Prediction: Indicators #6 and #7

So far, we’ve just looked at awards history. Now, we need to look at how the books have been received. Indicators #6 and #7 use some of the popular “Best Book of the Year” votes as an indicator. If a book isn’t well known, it likely won’t receive the most votes for the Nebula award.

The Locus Awards are one of the longest running and most reliable reader polls out there. It’s a well-informed audience, and their track record is strong. Of the 13 years since 2000, all but one eventual Nebula winner has made the Locus Award list. These are lists of the top 20 novels of the year in several categories (Science Fiction, Fantasy, Young Adult, First Novel, etc.). It doesn’t seem to matter where you place on the lists: we’ve had winners in 1st place, winners in 15th place, and winners on the Young Adult list. You just have to show up somewhere.

Unfortunately for us, the Locus Award results come out later in the year, so they won’t be available for some time.

Although it lacks the long history of the Locus, the Goodreads Choice Awards attract tons of voters and give us perhaps the best sense of the “general public’s” reaction to a book. Although the awards have only been in place since 2009 (4 years of data), every Nebula-winning book since then has placed on either the Goodreads Science Fiction or Fantasy year-end readers’ top 20 list. Who knows if this will continue in the future; we can address that with proper weighting, but it seems like a good indicator for right now.

So, that leaves us with these two Indicators:

Indicator #6: Nominee places in the Locus Awards. (92.3%)

Indicator #7: Nominee places in the Goodreads Choice Awards. (100%)

So, how did this year’s nominees fare in the Goodreads Choice Awards?

A pretty telling chart. Most of our books this year were not all that popular. Gaiman and Wecker broke through to the mainstream, but everyone else languished. I think this indicates some real problems for our other strong contenders (Fowler, Griffith). Did enough genre readers pick up their books? Even Leckie comes across poorly. The disparity between Gaiman, with more than 40,000 votes, and Leckie, who pushes a little over 300, is staggering.

Another category that gives Gaiman a clear win, and one that’ll push Wecker up in the formula.

2014 Nebula Prediction: Indicator #5

This is a simple one: is the Nebula Award for Best Novel biased towards science fiction or fantasy?

The SFWA (Science Fiction and Fantasy Writers of America) began as an SF organization, and it still favors SF titles in these awards. Since 2000, SF novels have won 9 of 13 times, a robust 69.2%. If you include the previous 20 years (1980-1999), SF novels have won 26 of 33 times, a staggering 78.8%. We saw this bias just last year, when 2312 won over 5 fantasy novels.

Interestingly, fantasy novels don’t suffer in terms of nominations. Since 2000, there have been 36 fantasy nominations versus 43 SF nominations. While you could probably quibble with the categorization of a couple of novels, this is roughly equal. This year we even have 4 fantasy novels squaring off against 4 SF novels.

So, that makes:

Indicator #5: Nominee is a science fiction novel (69.2%).

This year is an interesting mix of fantasy and science fiction nominees:

This is the first time Gaiman doesn’t have a clear advantage. While there are 4 science fiction novels, Fowler’s book pushes the limits of genre. If the SF voting crowd is looking for an alternative, they might coalesce around Leckie.

2014 Nebula Prediction: Indicators #3 and #4

Indicators #1 and #2 looked at nomination history. Let’s move on to winning. If you’ve won previously, does that increase your chances of winning again?

This wasn’t as straightforward as I would have liked. Winning a Nebula was not necessarily a ticket to winning another: 7 of the 13 authors who won the Nebula for Best Novel since 2000 were winning it for the first time. Oddly, the other 6 were winning at least their second Nebula for Best Novel. So I discarded prior wins in general as an indicator and instead used the narrower “Has previously won a Nebula Award for Best Novel.”

The more strongly correlated category (and I parsed through Hugo wins, Hugo + Nebula wins, and so forth) seemed to be how honored you’d been over your career as a writer, defined as the sum of Nebula wins + Nebula nominations + Hugo wins + Hugo nominations. This gives a good measure of an author’s profile, and the most honored nominee won a strong 53.9% of the time, with little statistically significant difference in winning chances between placing 2nd, 3rd, 4th, or lower on this ranking.

Both of these pass the eye test, although they are probably a little too dependent on each other. However, in a Linear Opinion Pool, this dependence can be taken care of with proper weighting. So the Indicators worked out like this:

Indicator #3: Has previously won a Nebula award for best novel (46.1%)

Indicator #4: Was the most honored nominee (Nebula Wins + Nominations + Hugo Wins + Nominations) (53.9%)
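To make the “most honored” rule concrete, here is a minimal Python sketch of the career-honors sum and the pick of the top nominee. The author names and counts are hypothetical placeholders, not the actual records of this year’s nominees, and the sketch assumes the four counts are simply added as-is, exactly as the sum above is written.

```python
from typing import NamedTuple


class Record(NamedTuple):
    """Prior award counts for one nominee (hypothetical data)."""
    nebula_wins: int
    nebula_noms: int
    hugo_wins: int
    hugo_noms: int


def career_honors(r: Record) -> int:
    # Indicator #4's measure: Nebula wins + nominations + Hugo wins + nominations.
    return r.nebula_wins + r.nebula_noms + r.hugo_wins + r.hugo_noms


def most_honored(records: dict[str, Record]) -> list[str]:
    # Return every nominee tied for the highest career-honors total.
    best = max(career_honors(r) for r in records.values())
    return [name for name, r in records.items() if career_honors(r) == best]


# Hypothetical slate: these numbers are illustrative only.
slate = {
    "Author A": Record(1, 6, 1, 4),
    "Author B": Record(0, 9, 0, 1),
    "Author C": Record(0, 0, 0, 0),
}
print({name: career_honors(r) for name, r in slate.items()})
print(most_honored(slate))
```

Returning a list rather than a single name keeps ties visible, since two nominees could share the top total.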

Where does that leave this year’s nominees?

Gaiman and Fowler far outstrip the other nominees in terms of career honors. Fowler even beats Gaiman on the Nebula nomination front, although Gaiman pulls ahead when the Hugo is factored in.

Gaiman and Griffith have previously won the Nebula for Best Novel. Griffith’s previous win should get Hild a good look by a lot of readers. Gaiman likely doesn’t need more eyes on Ocean, but he is undeniably well known to this voting audience.

2014 Nebula Prediction: Indicators #1 and #2

By looking at previous Nebula winners and their award history, it was easy to come up with some strong indicators. Of the past 13 years (back to 2000), 11 of 13 winners had previously been nominated for a Nebula. This makes sense; to be competitive for this award, voters need to know who you are. Previous nominations mean direct exposure to the voting pool, and thus voters are more likely to know those authors, to have read their books, and to vote for them.

Likewise, being previously nominated for a Hugo is a strong predictor, with 10 out of 13 winners having at least one previous Hugo nomination. It’s not as strong, but then why should the Nebula voters listen to the Hugo voters?

Interestingly, prior nominations were more reliable than prior wins. This may have something to do with the number of different Nebula and Hugo categories.

That’s a strong correlation, and it’s actually even stronger than that. Some of the people who snuck in wins without many prior nominations were huge names: Neil Gaiman for American Gods in 2003 and Michael Chabon for The Yiddish Policemen’s Union in 2008. Gaiman was incredibly well known for his comic Sandman, and did have a Nebula nomination for some of his film work. Chabon had previously won the Pulitzer Prize and was a widely acclaimed author from the field of literary fiction. Their winning books got huge marketing pushes and were some of the most buzzed-about texts of their year. Don’t worry: later indicators are going to take that fame and buzz into account.

But we’re looking at only prior nomination history in Indicators #1 and #2. In fact, Chabon is the only author in the past 13 years to win without at least one prior Hugo or Nebula nomination.

What are statistics, though, without some outliers?

The lesson: you’d better be somewhat known if you want to win the Nebula. The safest route is to have already been nominated for a Nebula (winning doesn’t actually mean as much, although that will factor into Indicators #3 and #4). Second best is to be nominated for a Hugo. Failing that, win a Pulitzer.

So, to put this in mathematical terms:

Indicator #1: Has previously been nominated for a Nebula (84.6%)

Indicator #2: Has previously been nominated for a Hugo (76.9%)

Those numbers aren’t as good as they look, because every year multiple authors have prior nominations, so they have to split up that 84.6% or 76.9%. However, let’s look at this year’s nominees:

N. Wins stands for prior Nebula wins, N. Nominations for prior Nebula Nominations, H. Wins for prior Hugo wins, and H. Noms for prior Hugo nominations.

The analysis is pretty easy here. Our prior Nebula nominees–Gaiman, Fowler, Griffith, Nagata, and Samatar–come out ahead of the newbies Gannon, Leckie, and Wecker. Fowler and Gaiman look particularly impressive considering their number of wins and nominations.

The Hugo nominees make the difference between our well-known and emerging authors even more apparent. Only Gaiman, Fowler, and Griffith have previously been nominated for the Hugo.

A safe bet, then, based solely on these two indicators, would be that Gaiman, Fowler, or Griffith wins. From Indicators #1 and #2 alone, those three pull out into the lead.

From a methodology standpoint, I take the overall chances (84.6% for prior nominees versus 15.4% for everyone else) and distribute each share evenly over the nominees in that group. Our Nebula prediction table comes out like this:

Chances to Win Based Solely on Indicator #1: Previously Nominated for Nebula

Fowler: 16.9%

Gaiman: 16.9%

Gannon: 5.2%

Griffith: 16.9%

Leckie: 5.2%

Nagata: 16.9%

Samatar: 16.9%

Wecker: 5.2%

Those odds are considerably different from the “drawing out of a hat” odds of a flat 12.5% each. The Hugo odds are similar, but even better for the prior Hugo nominees: Fowler, Gaiman, and Griffith have a robust 25.6% each, and the rest ring in with a paltry 4.6%.
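The splitting step can be sketched in Python. This is a minimal reconstruction, not necessarily the exact spreadsheet behind the table: it assumes each group’s share is divided evenly among its members (which the equal 16.9% figures suggest), and the function name `split_indicator` is hypothetical. It gives 16.9% for each prior Nebula nominee and about 5.1% for each of the others, matching the table above to within a tenth of a percentage point, and it reproduces the Hugo split of 25.6% and 4.6%.

```python
from fractions import Fraction


def split_indicator(hit_rate: Fraction, has_trait: dict[str, bool]) -> dict[str, float]:
    """Spread one indicator's historical hit rate across this year's nominees.

    hit_rate:  share of past winners who had the trait (e.g. Fraction(11, 13)).
    has_trait: nominee name -> whether that nominee has the trait this year.
    Nominees with the trait split hit_rate evenly; the others split 1 - hit_rate.
    """
    with_trait = [name for name, t in has_trait.items() if t]
    without = [name for name, t in has_trait.items() if not t]
    if not with_trait or not without:
        # Assumption: if everyone (or no one) has the trait, the indicator
        # can't separate the nominees, so fall back to equal odds.
        return {name: 1 / len(has_trait) for name in has_trait}
    table = {}
    for group, share in ((with_trait, hit_rate), (without, 1 - hit_rate)):
        for name in group:
            table[name] = float(share / len(group))
    return table


prior_nebula = {"Fowler": True, "Gaiman": True, "Gannon": False, "Griffith": True,
                "Leckie": False, "Nagata": True, "Samatar": True, "Wecker": False}
prior_hugo = {name: name in {"Fowler", "Gaiman", "Griffith"} for name in prior_nebula}

nebula_odds = split_indicator(Fraction(11, 13), prior_nebula)  # 11 of 13 past winners
hugo_odds = split_indicator(Fraction(10, 13), prior_hugo)      # 10 of 13 past winners
for name in prior_nebula:
    print(f"{name}: Nebula {nebula_odds[name]:.1%}, Hugo {hugo_odds[name]:.1%}")
```

Using `Fraction(11, 13)` instead of the rounded 84.6% keeps the split exact until the final conversion to a float.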

2014 Nebula Prediction: Methodology and Math

To predict future Nebula wins on past Nebula patterns, let’s note several things:

1. We don’t have much data: The Nebula does not release voting numbers (the Hugo does), so we don’t have any sense of who finished second versus sixth. This makes it harder to predict.

2. The data is unreliable: Nebula voting patterns have changed a great deal over the past 20-30 years. While the Nebula goes back to 1966, can we really use what happened in the 1970s–back before Fantasy was part of the SFWA, and the whole field of SF was much smaller and more insular–to predict an award in 2014?

3. The Nebula is erratic: There have been some rather unpredictable Nebula awards in the past 15 years, with the award going to lesser known books like The Quantum Rose or The Speed of Dark. Good for them, but bad for a predictive model.

So, what does that mean? It means that we don’t have enough reliable information to run some of the more complex statistical models (e.g., the kind of Bayesian models made popular by people like Nate Silver).

I looked over several possible statistical modeling methodologies, and I decided, at least for the purpose of this blog, that a Linear Opinion Pool makes the most sense. Frequently used in risk management, a Linear Opinion Pool is a way of aggregating expert opinions (predictions) and then weighting them to come up with a predictive average. Crudely, here’s what it looks like:

Final % of a Book Winning the Award = Expert #1’s % Guess * Weight for Expert #1 + Expert #2’s % Guess * Weight for Expert #2 + Expert #3’s % Guess * Weight for Expert #3, and so on.

Here, the Weight is a measure of how reliable we think each expert is, and all those weights have to add up to 1.
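Written out, the pool for a single book is a weighted sum. The symbols are shorthand introduced here, not the original’s: $p_i(b)$ is Expert #$i$’s probability that book $b$ wins, $w_i$ is that expert’s weight, and the weights are assumed to be non-negative. The second line shows why the weights must add up to 1: the pooled numbers then still form a valid probability table over the nominees.

```latex
P(b) = \sum_{i=1}^{n} w_i \, p_i(b), \qquad w_i \ge 0, \quad \sum_{i=1}^{n} w_i = 1

\sum_{b} P(b) = \sum_{i=1}^{n} w_i \sum_{b} p_i(b) = \sum_{i=1}^{n} w_i \cdot 1 = 1
```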

Bored? It gets worse. Analogy time! Imagine a game of dice with a standard six-sided die. If we believe the die isn’t loaded, then the chance of rolling any given number 1-6 is the same, a simple 16.67%. That would be the chance of correctly picking the Nebula winner from six nominees if we just drew a name out of a hat.

Now, we know that the Nebula dice are loaded: certain numbers have a better chance of coming up than others. This is where our experts come in. Let’s say one expert watches the game for a while and decides that the dice are heavily weighted in favor of the numbers 1 and 2. He then draws up a nice table for us:

Chance of Rolling a #1: 20%

Chance of Rolling a #2: 20%

Chance of Rolling a #3: 15%

Chance of Rolling a #4: 15%

Chance of Rolling a #5: 15%

Chance of Rolling a #6: 15%

We have three options here. First, we could roll the dice a bunch of times and check whether he was correct, but we can only do that if we have access to the dice. Second, we could trust the expert and use his percentages to make a bet. Lastly, we could reject the expert and go back to our simple 16.7% equal chances.
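The first option, rolling the die many times, is easy to mimic in a simulation. This Python sketch assumes the die really is loaded exactly as Expert #1 claims (20% each for 1 and 2, 15% each for 3 through 6), rolls it 100,000 times with a fixed seed, and compares the observed frequencies with the claimed ones. With the Nebula we get only one “roll” a year, which is roughly why this option isn’t open to us.

```python
import random
from collections import Counter

# Expert #1's claimed probability for each face.
claimed = {1: 0.20, 2: 0.20, 3: 0.15, 4: 0.15, 5: 0.15, 6: 0.15}


def observed_frequencies(probs: dict[int, float], rolls: int, seed: int = 0) -> dict[int, float]:
    """Roll a die loaded with `probs` `rolls` times and return each face's observed share."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    faces = list(probs)
    results = rng.choices(faces, weights=[probs[f] for f in faces], k=rolls)
    counts = Counter(results)
    return {f: counts[f] / rolls for f in faces}


observed = observed_frequencies(claimed, rolls=100_000)
for face in claimed:
    print(f"#{face}: claimed {claimed[face]:.0%}, observed {observed[face]:.1%}")
```

With this many rolls the observed shares should sit close to the claimed ones; with only a dozen or so rolls, they could easily drift far from them.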

What if we have more than one expert? This new expert thinks the game is more heavily fixed towards rolling a #1. His probability chart comes out like this:

Chance of Rolling a #1: 25%

Chance of Rolling a #2: 15%

Chance of Rolling a #3: 15%

Chance of Rolling a #4: 15%

Chance of Rolling a #5: 15%

Chance of Rolling a #6: 15%

Who do we trust? If we trusted them equally, we could average the two probabilities to get a 22.5% chance of rolling a 1. A Linear Opinion Pool, though, lets us trust one expert more than another. Say I trust Expert #1 90% and Expert #2 only 10% (because Expert #2 is drunk). I could then compute a weighted average of .9*20 + .1*25 = 20.5% chance of rolling a 1.
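Here is the same calculation in Python, a minimal sketch of a linear opinion pool applied to the two dice experts. The function name `linear_pool` is hypothetical. The tables are in percentages: Expert #1 gives 20 each to 1 and 2 and 15 each to 3 through 6; Expert #2 gives 25 to 1 and 15 to every other face. The sketch pools every face at once, not just #1.

```python
def linear_pool(tables: list[dict[int, float]], weights: list[float]) -> dict[int, float]:
    """Weighted average of several experts' probability tables (a linear opinion pool)."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    outcomes = tables[0].keys()
    return {o: sum(w * t[o] for t, w in zip(tables, weights)) for o in outcomes}


expert_1 = {1: 20, 2: 20, 3: 15, 4: 15, 5: 15, 6: 15}  # percentages
expert_2 = {1: 25, 2: 15, 3: 15, 4: 15, 5: 15, 6: 15}

equal = linear_pool([expert_1, expert_2], [0.5, 0.5])  # trust both equally
sober = linear_pool([expert_1, expert_2], [0.9, 0.1])  # Expert #2 is drunk
print(equal[1], sober[1])
```

The 90/10 pool puts #1 at 20.5% and #2 at 19.5%, and because the weights add up to 1 the pooled table still sums to 100%.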

This is the model I’m going to use to predict the Nebula, with 14 different “Experts” providing probability tables from data mining previous award results and predictors.

While I can get more into the math if anyone wants, let’s run down the advantages of a Linear Opinion Pool:

1. The math is easy to understand and transparent, unlike more complex statistical measures.

2. The formula can be easily tweaked by changing the weights for the experts.

3. Unreliable data can be tossed out more easily because we can see if parts of the formula are messing everything up.

4. Expert probabilities don’t rely on the same kind of enormous data sets other statistical models do.

5. Since the Nebula award is inherently erratic, this doesn’t assume the process is more predictable than it actually is.

So, the next steps are to start building Probability Tables based on past Nebula results. After that, we have to weight them. Then, we finalize the prediction. Onward!

2014 Nebula Prediction Version 1.0

Let’s get straight to the initial list:

1. Neil Gaiman, The Ocean at the End of the Lane (21.7%)

2. Ann Leckie, Ancillary Justice (13.7%)

3. Karen Joy Fowler, We Are All Completely Beside Ourselves (13.6%)

4. Nicola Griffith, Hild (12.2%)

5. Helene Wecker, The Golem and the Jinni (11.9%)

6. Linda Nagata, The Red: First Light (11.5%)

7. Sofia Samatar, A Stranger in Olondria (10.8%)

8. Charles E. Gannon, Fire with Fire (4.6%)

No real surprises. Gaiman is the clear favorite: a genre superstar writing a blockbuster novel that critics and readers loved. Who would have thought it? Ancillary Justice might be higher than you’d expect. My guess is that Leckie will emerge as the clear SF alternative to Gaiman, and we can’t ignore the Nebula’s bias towards SF. While Leckie has no Nebula history, her book did very well on year-end votes and critics’ lists. Fowler and Griffith did well given their award history, but the reception to their books was mixed, largely because of their lack of strong genre elements. They did particularly poorly on reader-voted lists.

There are still several more parts of the formula to be integrated and weighted, and some big indicators are on the horizon: the Hugo nominations and the Locus Readers’ Poll will come out later in the year. More discussion of methods to come, and then I’ll get into the formula.

Nebula 2014: Initial Methodology

So, how do we predict an award?

We might find out who has received the award in the past and look for patterns. We could then translate those patterns into probabilities, and then weight those various probabilities to come up with a predictive model. By testing that predictive model against past award winners, we could then tweak the model until it becomes somewhat (and only somewhat) reliable. Then, as new data comes out with new awards in future years, we could keep improving the model until we have something accurate.

Don’t worry, if you’re looking to be bored, I’ll get into the math later. For right now, what predictors are reliable for the Nebula?

On first glance, a couple starting points are obvious:

1. Previous award history: Does the Nebula have a tendency to honor people who have already been nominated for the Nebula or Hugo? Does it like repeat winners?

2. Genre: Is the Nebula biased towards fantasy or science fiction? If so, by how much?

3. Popularity: For someone to vote for a novel, they probably (hopefully!) have read it. Do the most popular novels win the Nebula? How would we measure popularity? Sales? Ratings? Votes?

4. Critical acclaim: Does the Nebula go to the most acclaimed novel of the year? How would we measure acclaim? Year end lists? Other award nominations?

If we were able to come up with 10-15 different predictors, then weight those to properly match the past 10-15 Nebula winners, we’d have a good start at an effective model.

I’ve already done a lot of this work, and I’ll be introducing the model and the first prediction over the next couple days.

Nebula 2014: Initial Analysis

It’s an intriguing set of texts this year: a heavyweight (Gaiman), two respected SFF authors pushing the limits of genre (Fowler, Griffith), a well-regarded SF debut (Leckie), and an interloper from the literary fiction world (Wecker). Rounding out the large slate (8 instead of the usual 6) are three lesser-known writers (Gannon, Samatar, and Nagata) who don’t stand much of a chance of winning. Such nominations play an important role, though, in bringing attention to lesser-known texts. Drawing attention to emerging authors is a key aspect of any award, and the Nebula often pushes up 1-2 such authors in its nomination process, even if they rarely, if ever, win. Nebula staples like Miéville and Jemisin both got their start with these kinds of nominations.

I see this as breaking down to a three-horse race between Gaiman’s The Ocean at the End of the Lane (the early favorite), Fowler’s We Are All Completely Beside Ourselves (which would have been my favorite if it had more explicit genre elements), and Ann Leckie’s Ancillary Justice (the more traditional SF alternative). I think Griffith’s Hild is too long and not “genre-y” enough, and Wecker’s The Golem and the Jinni is too mainstream for the SFWA audience.

Stay tuned for the first official prediction!